
SAN Box used via iSCSI

Introduction

In this lab we are experimenting with a Funtoo Linux machine that mounts a remote volume located on a Solaris machine through iSCSI. The remote volume resides on a RAID-Z ZFS pool (zvol).

The SAN box

Hardware and setup considerations

The SAN box is composed of the following:

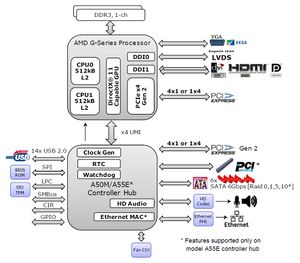

- Jetway NF81 Mini-ITX Motherboard:

- AMD eOntario (G-Series) T56N Dual Core 1.6 GHz APU @ 18W

- 2 x DDR3-800/1066 Single Channel SO-DIMM slots (up to 8 GB)

- Integrated ATI Radeon™ HD 6310 Graphics

- 2 x Realtek RTL8111E PCI-E Gigabit Ethernet

- 5 x SATA3 6Gb/s Connectors

- LVDS and Inverter connectors (used to connect a LCD panel directly on the motherboard)

- Computer case: Chenbro ES34069, which offers a lot for a compact case:

- 4 *hot-swappable* hard drive bays plus 1 internal bay for a 2.5" drive. The case is compartmented and two fans ventilate the compartment where the 4 hard drives reside (the drives remain cool)

- Lockable front door preventing access to the hard drive bays (mandatory if the machine sits in an open place and you have little devils at home who love playing with funny things behind your back; it can save you from mourning several terabytes of data after hearing a "Dad, what is that stuff?" :-) )

- 4x Western Digital Caviar Green 3TB (SATA-II) - storage pool, which will be divided into several virtual volumes exported via iSCSI

- 1x OCZ Agility 2 60GB (SATA-II) - system disk

- 2x 4GB Kingston DDR-3 1066 SO-DIMM memory modules

- 1x external USB DVD drive (Samsung S084, chosen for its aesthetics; a personal choice)

For the record here is what the CPU/chipset looks like:

Operating system choice and storage pool configuration

- Operating system: Oracle Solaris Express 11 (T56N APU has hardware acceleration for AES, according to Wikipedia... Crypto Framework has support for it, Solaris 10 and later required)

- Storage: all of the 4 WD will be used to create a big ZFS RAID-Z1 zpool (RAID-5-on-steroids, extremely robust in terms of preserving and automatically restoring the data integrity) with several emulated virtual block devices (zvols) exported via iSCSI.

Solaris 11 Express licensing terms have changed since the acquisition of Sun: check the OTN Licence terms to see whether you can use OSE 11 in your context. As a general rule of thumb: if your SAN box will hold strictly family data (i.e. you don't use it for supporting commercial activities / generating financial profits) you should be entitled to use OSE 11 freely. However, we are neither lawyers nor Oracle commercial partners, so always check with Oracle to see if they allow you to use OSE 11 freely or if they require you to buy a support contract in your context.

As an alternative we can suggest OpenIndiana. OpenIndiana build 148 supports ZFS version 5 and zpool version 28 (encryption is provided in zpool version 31, that version is, to our knowledge, only supported on OSE 11)

Networking considerations

Each of the two Ethernet NICs will be connected to a different Gigabit switch (the two switches are linked together). For the record, the switches used here are TrendNet TEG-S80Dg, which plug directly into a 120V outlet with no external PSU.

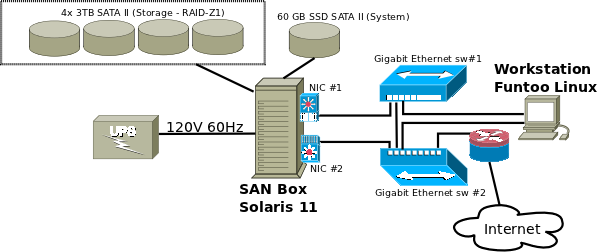

Architectural overview

Preliminary BIOS operations on the SAN Box

Before going on with the installation of Solaris, we have to do two operations:

- Upgrade the BIOS to its latest revision (our NF81 motherboard came with an older BIOS revision)

- Setup the BIOS

BIOS Upgrade

At date of writing, JetWay had published a newer BIOS revision (A03) for the motherboard. Unfortunately the board has no built-in flash utility accessible at machine startup, so the only solution is to build a bootable DOS USB key using Qemu (unetbootin with a FreeDOS image was tried, without success):

Flashing a BIOS is always a risky operation: always connect the computer to a UPS-protected power plug and let the flash run to completion

- On your Funtoo box:

- Emerge the package app-emulation/qemu

- Download Balder (minimalistic FreeDOS image), at date of writing balder10.img is the only version available

- Plug in your USB key, note how the kernel recognizes it (e.g. /dev/sdz) and back up any valuable data on it.

- With the partitioning tool of your choice (cfdisk, fdisk...) create a single partition on your USB key, change its type to 0xB (Win95 FAT32) and mark it as bootable

- Run Qemu, make it boot from the Balder image and use your USB key as a hard drive => qemu -boot a -fda balder10.img -hda /dev/sdz (your USB key is seen as drive C: and the Balder image as floppy drive A:)

- In the boot menu shown in the Qemu window select option "3 Start FreeDOS for i386 + FDXMS". After a fraction of a second, a very well known prompt coming from the antiquity of computer science welcomes you :)

- It is now time to install the boot code on the "hard drive" and copy all the files contained in the Balder image. To achieve that, execute in sequence sys c: followed by xcopy /E /N a: c:

- Close Qemu and test your key by running Qemu again: qemu /dev/sdz

- Do you see Balder boot menu? Perfect, close Qemu again

- Mount your USB key within the VFS: mount /dev/sdz /mnt/usbkey

- On the motherboard manufacturer's website, download the update archive (in the case of JetWay, get xxxxxxxx.zip not xxxxxxxx_w.zip, the latter is for flashing from a Windows instance). In general, BIOS updates come as a ZIP archive containing not only a BIOS image but also the adequate tools (for MS-DOS ;) to reflash the BIOS EEPROM. This is not the case with the JetWay NF81, but some motherboard models require you to change a jumper position on the motherboard before flashing the BIOS EEPROM (read-only/read-write).

- Extract in the above mount-point the files contained in the BIOS update ZIP archive.

- Now unmount the USB key - DO NOT UNPLUG THE KEY WITHOUT UNMOUNTING IT FIRST YOU WILL GET CORRUPTED FILES ON IT.

- On the SAN Box:

- Plug the USB key into the SAN box, turn the machine on and go into the BIOS settings to make sure the first boot device is the USB key. For the JetWay NF81 (more or less UEFI BIOS?): if you see your USB key twice in the boot devices list, with one instance name starting with "UEFI...", make sure this name is selected first, else the system won't consider the USB key as a bootable device.

- Save your changes and exit from the BIOS setup program; when your USB key is probed, the BIOS should boot from it

- In the Balder boot menu, choose again "3 Start FreeDOS for i386 + FDXMS"

- At the FreeDOS C:> prompt, just run the magic command FLASH.BAT (this DOS batch file runs the BIOS flashing with the adequate arguments)

- When the flashing finishes, power cycle the machine (in our case, for some reason, the flashing utility didn't return to the prompt)

- Go again in the BIOS Settings and load the default settings (highly recommended).

SAN box BIOS setup

Pay attention to the following settings:

- Main:

- Check the date and time it should be set on your local date and time

- SATA Config: No drives will be listed here except the one connected to the 5th SATA port (assuming you have enabled the SATA-IDE combined mode; if SATA-IDE combined mode has been disabled, no drive will show up). The option name is a bit misleading, it should have been called "IDE Drives"...

- Advanced:

- CPU Config -> C6 mode: enabled (C6 not shown in powertop?)

- Shutdown temperature: 75C (you can choose a bit lower)

- Chipset - Southbridge

- Onchip SATA channel: Enabled

- Onchip SATA type: AHCI (mandatory to be able to use hot plug; this also enables the drives' Native Command Queuing (NCQ))

- SATA-IDE Combined mode: Disabled (this option affects *only* the 5th SATA port, which is treated as an IDE one even if AHCI has been enabled for "Onchip SATA type"; undocumented in the manual :-( )

- Boot: set the device priority to the USB DVD-ROM drive first, then the 2.5" OCZ SSD drive

Phase 1: Setting up Solaris 11 on the SAN box

OK, this is not especially Funtoo related, but the following notes can definitely save you several hours. The whole process of setting up a Solaris operating system won't be explained because it is far beyond the scope of this article, but here is some pertinent information for the SAN setup. A few points:

- Everything we need is properly recognized out of the box (video drivers are untested, as Xorg won't be used), especially the Ethernet RTL8111E chipset (prtconf -D reports that the kernel is using a driver for the RTL8168; this is normal, both chipsets are very close so an alias has been put in /etc/driver_aliases).

- 3TB drives are properly recognized and used under Solaris, no need for the HBA adapter provided by Western Digital

- We won't partition the 4 Western Digital drives with GPT ourselves; we will use the whole devices directly

- According to Wikipedia, AES hardware acceleration of the AMD T56N APU is supported by the Solaris Cryptographic Framework (you won't see it as a hardware crypto provider)

- Gotcha: canadian-french keyboard layout gives an azerty keyboard on the console :(

Activating SSH to work from a remote machine

Solaris 11 Express comes with a text-only CD-ROM [1]. Because the machine serves as a storage box with no screen connected to it most of the time, and working from an 80x25 console is not very comfortable (especially with an incorrect keyboard mapping), the best starting point is to enable the network and activate SSH.

DNS servers configuration

The SAN box has 2 NICs which will be used as 2 different active interfaces with no fail-over/link aggregation configuration. The DNS servers have been configured to resolve the SAN box in a round-robin manner. Depending on the version of ISC BIND you use:

- ISC BIND version 8:

- Set the multiple-cnames option to yes (else you will get an error).

- Define your direct resolution like below:

(...)

sanbox-if0 IN A 192.168.1.100

sanbox-if1 IN A 192.168.1.101

(...)

sanbox IN CNAME sanbox-if0

sanbox IN CNAME sanbox-if1

- ISC BIND version 9

- You MUST use multiple A resource records

- Define your direct resolution like below:

(...)

sanbox-if0 IN A 192.168.1.100

sanbox-if1 IN A 192.168.1.101

sanbox IN A 192.168.1.100

sanbox IN A 192.168.1.101

(...)
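To get a feel for what round-robin resolution buys, the following Python sketch models a server that rotates the two A records by one position on each query and counts which address a client that always picks the first answer would end up using. This is only a model: the rotation policy is an assumption (the real ordering depends on the BIND configuration), and the name `cyclic_answers` is made up for this illustration.

```python
from collections import Counter, deque


def cyclic_answers(addresses, queries):
    """Yield the address list returned for each query, rotating the record
    set by one position per query (assumed 'cyclic' ordering)."""
    ring = deque(addresses)
    for _ in range(queries):
        yield list(ring)
        ring.rotate(-1)  # the next query starts with the following record


# The two A records defined for "sanbox" in the zone above.
SANBOX = ["192.168.1.100", "192.168.1.101"]

# Clients usually connect to the first address of the answer.
first_choices = Counter(answer[0] for answer in cyclic_answers(SANBOX, 10))
for address, hits in sorted(first_choices.items()):
    print(address, hits)
```

Under this assumption the connections are split evenly between the two NICs, which is the whole point of publishing both addresses under a single name.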

Network configuration

Let's configure the network on the SAN box. If you are used to Linux, you will see that the process in the Solaris world is a bit similar, with some substantial differences however. First, let's see which NICs are recognized by the Solaris kernel at system startup:

# dladm show-phys

LINK MEDIA STATE SPEED DUPLEX DEVICE

rge0 Ethernet unknown 0 unknown rge0

rge1 Ethernet unknown 0 unknown rge1

Both Ethernet interfaces are connected to a switch (their link LEDs are lit) but they still need to be "plumbed" (plumbing done this way is not persistent across reboots; the /etc/hostname.rgeX files created further down take care of that):

# ifconfig rge0 plumb

# ifconfig rge1 plumb

# dladm show-phys

LINK MEDIA STATE SPEED DUPLEX DEVICE

rge0 Ethernet up 1000 full rge0

rge1 Ethernet up 1000 full rge1

Once plumbed, both interfaces will also be listed when doing an ifconfig:

# ifconfig -a

lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1

inet 127.0.0.1 netmask ff000000

rge0: flags=1000843<BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 2

inet 0.0.0.0 netmask 0

ether 0:30:18:a1:73:c6

rge1: flags=1000842<BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 4

inet 0.0.0.0 netmask 0

ether 0:30:18:a1:73:c6

Time to assign a static IP address to both interfaces (we won't use link aggregation or fail-over configuration in that case):

# ifconfig rge0 192.168.1.100/24 up

# ifconfig rge1 192.168.1.101/24 up

Notice the up at the end: if you forget it, Solaris will assign an IP address to the NIC but will consider it inactive (down). Checking again:

# ifconfig -a

lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1

inet 127.0.0.1 netmask ff000000

rge0: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 2

inet 192.168.1.100 netmask ffffff00 broadcast 192.168.1.255

ether 0:30:18:a1:73:c6

rge1: flags=1000842<BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 4

inet 0.0.0.0 netmask 0

ether 0:30:18:a1:73:c6
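Solaris prints the netmask in hexadecimal (ffffff00) while the address was given in CIDR notation (/24). As a quick cross-check, this small Python sketch uses the standard `ipaddress` module to derive the hexadecimal netmask and the broadcast address from the CIDR form; the helper name `describe` is purely illustrative.

```python
import ipaddress


def describe(cidr):
    """Return the address, hexadecimal netmask and broadcast address
    corresponding to an interface given in CIDR notation."""
    iface = ipaddress.ip_interface(cidr)
    return {
        "address": str(iface.ip),
        # ifconfig on Solaris shows the netmask as 8 hexadecimal digits
        "netmask_hex": format(int(iface.netmask), "08x"),
        "broadcast": str(iface.network.broadcast_address),
    }


for cidr in ("192.168.1.100/24", "192.168.1.101/24"):
    print(describe(cidr))
```

For 192.168.1.100/24 this gives netmask ffffff00 and broadcast 192.168.1.255, matching the rge0 entry in the listing.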

To have rge0 and rge1 automatically brought up at system startup, we need to define two files named /etc/hostname.rgeX, each containing the IP address of the corresponding NIC:

# echo "192.168.1.100/24" > /etc/hostname.rge0

# echo "192.168.1.101/24" > /etc/hostname.rge1

Now let's add a default route and make it persistent across reboots:

# echo 192.168.1.1 > /etc/defaultrouter

# route add default `cat /etc/defaultrouter`

# ping 192.168.1.1

192.168.1.1 is alive

Once everything is in place, reboot the SAN box and see if both NICs are up with an IP address assigned and with a default route:

# ifconfig -a

lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1

inet 127.0.0.1 netmask ff000000

rge0: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 2

inet 192.168.1.100 netmask ffffff00 broadcast 192.168.1.255

ether 0:30:18:a1:73:c6

rge1: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 4

inet 192.168.1.101 netmask ffffff00 broadcast 192.168.1.255

ether 0:30:18:a1:73:c6

(...)

# netstat -rn

Routing Table: IPv4

Destination Gateway Flags Ref Use Interface

-------------------- -------------------- ----- ----- ---------- ---------

default 192.168.1.1 UG 2 682

127.0.0.1 127.0.0.1 UH 2 172 lo0

192.168.1.0 192.168.1.101 U 3 127425 rge1

192.168.1.0 192.168.1.100 U 3 1260 rge0

A final step for this paragraph: name resolution configuration. The environment used here has DNS servers (primary 192.168.1.2, secondary 192.168.1.1) so /etc/resolv.conf looks like this:

search mytestdomain.lan

nameserver 192.168.1.2

nameserver 192.168.1.1

By default the hosts resolution is configured (/etc/nsswitch.conf) to use the DNS servers first then the file /etc/hosts so make sure that /etc/nsswitch.conf has the following line:

hosts: dns

Make sure name resolution is in order:

# nslookup sanbox-if0

Server: 192.168.1.2

Address: 192.168.1.2#53

Name: sanbox-if0.mytestdomain.lan

Address: 192.168.1.100

# nslookup sanbox-if1

Server: 192.168.1.2

Address: 192.168.1.2#53

Name: sanbox-if1.mytestdomain.lan

Address: 192.168.1.101

# nslookup 192.168.1.100

Server: 192.168.1.2

Address: 192.168.1.2#53

100.1.168.192.in-addr.arpa name = sanbox-if0.mytestdomain.lan.

# nslookup 192.168.1.101

Server: 192.168.1.2

Address: 192.168.1.2#53

101.1.168.192.in-addr.arpa name = sanbox-if1.mytestdomain.lan.

Does round-robin canonical name resolution work?

# nslookup sanbox

Server: 192.168.1.2

Address: 192.168.1.2#53

Name: sanbox.mytestdomain.lan

Address: 192.168.1.100

Name: sanbox.mytestdomain.lan

Address: 192.168.1.101

# nslookup sanbox

Server: 192.168.1.2

Address: 192.168.1.2#53

Name: sanbox.mytestdomain.lan

Address: 192.168.1.101

Name: sanbox.mytestdomain.lan

Address: 192.168.1.100

Perfect! Two different requests give the list in a different order. Now reboot the box and check that everything is in order:

- Both network interfaces automatically brought up with an IP address assigned

- The default route is set to the right gateway

- Name resolution is set up (see /etc/resolv.conf)

Bringing up SSH

This is very straightforward:

- Check if the service is disabled (on a fresh install it is):

# svcs -a | grep ssh

disabled 8:07:01 svc:/network/ssh:default

- Edit /etc/ssh/sshd_config (nano and vi are included with Solaris) to match your preferences. Do not bother with remote root access: the RBAC security policies of Solaris do not allow it, and you must log on with a normal user account before gaining root privileges with su -.

- Now enable the SSH server and check its status

# svcadm enable ssh

# svcs -a | grep ssh

online 8:07:01 svc:/network/ssh:default

If you get maintenance instead of online, it means the service encountered an error somewhere; usually it is due to a typo in the configuration file. You can get some information with svcs -x ssh
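If you ever want to script that check, the three-column lines printed by svcs are easy to split. The Python sketch below parses such a line and tells whether the service is online; the helper names and the "maintenance" sample line are hypothetical, and only the "online" line comes from the listing above.

```python
def parse_svcs_line(line):
    """Split one line of `svcs` output into state, start time and FMRI."""
    state, stime, fmri = line.split(None, 2)
    return {"state": state, "stime": stime, "fmri": fmri.strip()}


def is_online(line):
    """True only when the service reports the 'online' state."""
    return parse_svcs_line(line)["state"] == "online"


# Line taken from the listing above.
good = "online 8:07:01 svc:/network/ssh:default"
# Hypothetical line, as it might look after a typo in the configuration file.
bad = "maintenance 8:07:01 svc:/network/ssh:default"

print(is_online(good), is_online(bad))
```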

SSH is now ready and listens for connections (here coming from any IPv4/IPv6 address, hence the two lines):

# netstat -an | grep "22"

*.22 *.* 0 0 128000 0 LISTEN

*.22 *.* 0 0 128000 0 LISTEN

Enabling some extra virtual terminals

Although the machine is very likely to be used via some SSH remote connections, it can however be useful to have multiple virtual consoles enabled on the box (just like in Linux you will be able to switch in between using the magic key combination Ctrl-Alt-F<VT number>). By default Solaris gives you a single console but here is how to enable some extra virtual terminals:

- Enable the service vtdaemon and make sure it runs:

# svcadm enable vtdaemon

# svcs -l vtdaemon

fmri svc:/system/vtdaemon:default

name vtdaemon for virtual console secure switch

enabled true

state online

next_state none

state_time June 19, 2011 09:21:53 AM EDT

logfile /var/svc/log/system-vtdaemon:default.log

restarter svc:/system/svc/restarter:default

contract_id 97

dependency require_all/none svc:/system/console-login:default (online)

Another form of service checking (long form) has been used here just for the sake of demonstrating.

- Now enable five more virtual consoles (vt2 to vt6) and enable the hotkeys property of vtdaemon, else you won't be able to switch between the virtual consoles:

# for i in 2 3 4 5 6 ; do svcadm enable console-login:vt$i; done

# svccfg -s vtdaemon setprop options/hotkeys=true

If you want to disable the screen auto-locking capability of your newly added virtual terminals:

# svccfg -s vtdaemon setprop options/secure=false

Refresh the configuration parameters of vtdaemon (mandatory!) and restart it:

# svcadm refresh vtdaemon

# svcadm restart vtdaemon

The service vtdaemon and the virtual consoles are now up and running:

# svcs -a | grep "vt"

online 9:43:14 svc:/system/vtdaemon:default

online 9:43:14 svc:/system/console-login:vt3

online 9:43:14 svc:/system/console-login:vt2

online 9:43:14 svc:/system/console-login:vt5

online 9:43:14 svc:/system/console-login:vt6

online 9:43:14 svc:/system/console-login:vt4

console-login:vtX won't be enabled and online if vtdaemon itself is not enabled and online.

Now try to switch between virtual terminals with Ctrl-Alt-F2 to Ctrl-Alt-F6 (virtual terminals auto-lock when you switch between them if you did not set the vtdaemon property options/secure to false). You can return to the console with Ctrl-Alt-F1.

Be a Watt-saver!

When idle with all of the disks spun up, the SAN box consumes nearly 40W at the plug, and nearly 60W when dealing with a lot of I/O activity. 40W may not seem a big figure, yet it represents roughly 1kWh/day and 365kWh/year (for your wallet, at a rate of 10¢/kWh, an expense of about $36.50/year). Not a big deal, but preserving every bit of natural resources for Earth sustainability is welcome and the SAN box can easily do its part, especially when you are out of your house for several hours or days :-) Solaris has device power management features and they are easy to configure:

Enforcing power management is mandatory on many non Oracle/Sun/Fujitsu machines because Solaris will fail to detect the machine's device power management capabilities, hence leaving this feature disabled. Of course this has the consequence that the drives are not automatically spun down by Solaris when they have not been solicited for a couple of minutes.

- First run the format command (results can differ in your case):

# format

Searching for disks...done

AVAILABLE DISK SELECTIONS:

0. c7t0d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@0,0

1. c7t1d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@1,0

2. c7t2d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@2,0

3. c7t3d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@3,0

4. c7t4d0 <ATA -OCZ-AGILITY2 -1.32 cyl 3914 alt 2 hd 255 sec 63>

/pci@0,0/pci1002,4393@11/disk@4,0

Specify disk (enter its number):

Note the physical paths of the disks (here pci@0,0/pci1002,4393@11/disk@0,0 to pci@0,0/pci1002,4393@11/disk@3,0 are of interest) and exit by pressing Ctrl-C.

- Second, edit the file /etc/power.conf and look for the following line:

autopm auto

- Change it for:

autopm enable

- Third, at the end of the same file, add one device-thresholds line per storage device you want to spin down (device-thresholds needs the physical path of the device), followed by the delay before the device enters standby mode. In our case we want to wait 10 minutes, which gives:

device-thresholds /pci@0,0/pci1002,4393@11/disk@0,0 10m

device-thresholds /pci@0,0/pci1002,4393@11/disk@1,0 10m

device-thresholds /pci@0,0/pci1002,4393@11/disk@2,0 10m

device-thresholds /pci@0,0/pci1002,4393@11/disk@3,0 10m

- Fourth, run pmconfig (no arguments) to make this new configuration active
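With more than a handful of disks, typing those lines by hand gets error-prone. Here is a minimal Python sketch that builds the device-thresholds lines from a list of physical paths as reported by format; the function name `device_threshold_lines` and the default delay are choices made for this example, not anything provided by Solaris.

```python
def device_threshold_lines(physical_paths, delay="10m"):
    """Build one power.conf 'device-thresholds' line per physical path."""
    lines = []
    for path in physical_paths:
        # power.conf expects an absolute physical path; add the leading
        # slash if it was dropped when copying the path.
        if not path.startswith("/"):
            path = "/" + path
        lines.append("device-thresholds {} {}".format(path, delay))
    return lines


# The four storage-pool disks from the format output above.
DISK_PATHS = ["/pci@0,0/pci1002,4393@11/disk@{},0".format(n) for n in range(4)]

for line in device_threshold_lines(DISK_PATHS):
    print(line)
```

The printed lines can then be appended to /etc/power.conf before running pmconfig.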

This configuration (theoretically) puts the 4 hard drives in standby mode after 10 minutes of inactivity. It is possible that your drives come with preconfigured values preventing them from being spun down before a factory-set delay, or from being spun down at all (in our experiments, the WD Caviar Green drives were put in standby after a factory-set delay of ~8 minutes).

With the hardware used to build the SAN box, no more than 27W is drawn from the power plug when all of the drives are in standby and the CPU is idle (about 32% less than the original 40W) :-).
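The figures quoted in this section can be re-derived with a few lines of Python. The sketch below assumes the box stays in the given state around the clock and uses the 10¢/kWh rate mentioned earlier; the function names are illustrative only.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def yearly_energy_kwh(watts):
    """Energy used in one year by a constant load, in kWh."""
    return watts * HOURS_PER_DAY * DAYS_PER_YEAR / 1000.0


def yearly_cost(watts, rate_per_kwh):
    """Yearly cost of a constant load at the given price per kWh."""
    return yearly_energy_kwh(watts) * rate_per_kwh


IDLE_SPINNING_W = 40  # disks spun up, machine idle
IDLE_STANDBY_W = 27   # disks in standby, CPU idle
RATE = 0.10           # dollars per kWh

for label, watts in (("spinning", IDLE_SPINNING_W), ("standby", IDLE_STANDBY_W)):
    print(label, round(yearly_energy_kwh(watts), 1), "kWh/year,",
          round(yearly_cost(watts, RATE), 2), "$/year")

saving_percent = 100.0 * (IDLE_SPINNING_W - IDLE_STANDBY_W) / IDLE_SPINNING_W
print("saving:", saving_percent, "%")
```

A constant 40W works out to 0.96 kWh/day, so the exact yearly figure is a little lower than the rounded 1 kWh/day estimate used in the text. The saving between 40W and 27W is 32.5%.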

Phase 2: Creating the SAN storage pool on the SAN box

At date of writing (June 2011) Solaris does not support adding hard drives to an existing Z-pool created as a RAID-Z array in order to increase its size. It is however possible to increase the storage space by replacing all of the drives composing a RAID-Z array one after another (wait for the completion of the re-silvering process after each drive replacement).

Enabling the hotplug service

The very first step of this section is not creating the Z-pool but, instead, checking that the hotplug service is enabled and online, as the computer case we use has hot-pluggable SATA bays:

# svcs -l hotplug

fmri svc:/system/hotplug:default

name hotplug daemon

enabled true

state online

next_state none

state_time June 19, 2011 10:22:22 AM EDT

logfile /var/svc/log/system-hotplug:default.log

restarter svc:/system/svc/restarter:default

contract_id 61

dependency require_all/none svc:/system/device/local (online)

dependency require_all/none svc:/system/filesystem/local (online)

If the service is shown as disabled, just enable it with svcadm enable hotplug and check that it is reported as online afterwards. When this service is online, the Solaris kernel is aware whenever a disk is plugged in or removed from the computer frame (your SATA bays must support hot plugging, which is the case here).

Creating the SAN storage pool (RAID-Z1)

RAID-Z pools can be created in two flavors:

- RAID-Z1 (~RAID-5): survives the failure of only one disk, at the price of "sacrificing" the capacity of one disk (4x 3 TB gives 9 TB of storage)

- RAID-Z2 (~RAID-6): survives the failure of up to two disks, at the price of "sacrificing" the capacity of two disks (4x 3 TB gives 6 TB of storage) and requiring more computational power from the CPU.

A good trade-off here between computational power/storage (main priority)/reliability is to use RAID-Z1.
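The capacity figures above follow from a simple rule: usable space is roughly (number of disks minus number of parity disks) times the size of one disk. The Python sketch below applies it to our four 3 TB drives; it is a simplified model that ignores ZFS metadata and padding overhead, and the function name is made up for the example.

```python
TB = 10**12   # decimal terabyte, as used by drive vendors
TIB = 2**40   # binary tebibyte, as reported by most tools


def raidz_usable_bytes(disk_count, disk_bytes, parity):
    """Approximate usable capacity of a RAID-Z vdev (overhead ignored)."""
    if disk_count <= parity:
        raise ValueError("need more disks than parity devices")
    return (disk_count - parity) * disk_bytes


raidz1 = raidz_usable_bytes(4, 3 * TB, parity=1)
raidz2 = raidz_usable_bytes(4, 3 * TB, parity=2)

print("RAID-Z1:", raidz1 // TB, "TB =", round(raidz1 / TIB, 2), "TiB")
print("RAID-Z2:", raidz2 // TB, "TB =", round(raidz2 / TIB, 2), "TiB")
```

Note that 9 TB in decimal units is about 8.19 in binary units, so tools that count in powers of two will display a figure closer to 8 than to 9 for the RAID-Z1 pool.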

The first step is to identify the logical device name of the drives that will be used for our RAID-Z1 storage pool. If you have configured the power-management of the SAN box (see above paragraphs), you should have noticed that the format command returns something (e.g. c7t0d0) just before the <ATA-...> drive identification string. This is the logical device name we will need to create the storage pool!

0. c7t0d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@0,0

1. c7t1d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@1,0

2. c7t2d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@2,0

3. c7t3d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@3,0

- As each of the SATA drives is connected to its own SATA bus, Solaris sees them as 4 different "SCSI targets" plugged into the same "SCSI HBA" (one LUN per target, hence the d0)

- The zpool command will automatically create a GPT label covering the whole disk on each of the given disks. GPT labels overcome the 2.19TB limit and are redundant structures (one copy is put at the beginning of the disk, one exact copy at the end of the disk).
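The logical device names follow the cXtYdZ pattern (controller, target, LUN). As an illustration, this Python sketch splits such a name into its components with a regular expression; the helper `parse_device` is hypothetical and only handles whole-disk names like the ones used in this article.

```python
import re

# c<controller>t<target>d<LUN>, e.g. c7t2d0
DEVICE_RE = re.compile(r"^c(\d+)t(\d+)d(\d+)$")


def parse_device(name):
    """Split a Solaris logical disk name into controller, target and LUN."""
    match = DEVICE_RE.match(name)
    if match is None:
        raise ValueError("not a cXtYdZ device name: {!r}".format(name))
    controller, target, lun = (int(group) for group in match.groups())
    return {"controller": controller, "target": target, "lun": lun}


for name in ("c7t0d0", "c7t1d0", "c7t2d0", "c7t3d0"):
    print(name, parse_device(name))
```

All four drives share controller 7 and LUN 0; only the target number changes, which is consistent with the disk@N,0 part of the physical paths.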

Creating the storage pool (named san here) as a RAID-Z1 array is simply achieved with the following incantation (of course adapt to your case... here we use c7t0d0, c7t1d0, c7t2d0 and c7t3d0):

# zpool create san raidz c7t0d0 c7t1d0 c7t2d0 c7t3d0

Now check status and say hello to your brand new SAN storage pool (notice it has been mounted with its name directly under the VFS root):

# zpool status san

pool: san

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

san ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

c7t0d0 ONLINE 0 0 0

c7t1d0 ONLINE 0 0 0

c7t2d0 ONLINE 0 0 0

c7t3d0 ONLINE 0 0 0

errors: No known data errors

# df -h

Filesystem Size Used Avail Use% Mounted on

(...)

san 8.1T 45K 8.1T 1% /san

The above just says: everything is in order, the pool is fully functional (not in a DEGRADED state).

Torturing the SAN pool

This is not mandatory, but at this point we will run some (evil) tests.

Removal of one disk

What would happen if a disk should die? To see, simply remove one of the disks from its bay without doing anything else :-). The Solaris kernel should see that a drive has just been removed:

# dmesg

(...)

Jun 19 12:03:25 xxxxx genunix: [ID 408114 kern.info] /pci@0,0/pci1002,4393@11/disk@2,0 (sd3) removed

Now check the array status:

# zpool status san

pool: san

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

san ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

c7t0d0 ONLINE 0 0 0

c7t1d0 ONLINE 0 0 0

c7t2d0 ONLINE 0 0 0

c7t3d0 ONLINE 0 0 0

errors: No known data errors

(...)

Nothing?... This is absolutely normal if no write operations occurred on the pool from the time you have removed the hard drive. If you create some activity you will see the pool switch in degraded state:

# dd if=/dev/random of=/san/test.dd count=1000

# zpool status san

pool: san

state: DEGRADED

status: One or more devices has been removed by the administrator.

Sufficient replicas exist for the pool to continue functioning in a

degraded state.

action: Online the device using 'zpool online' or replace the device with

'zpool replace'.

scan: none requested

config:

NAME STATE READ WRITE CKSUM

san DEGRADED 0 0 0

raidz1-0 DEGRADED 0 0 0

c7t0d0 ONLINE 0 0 0

c7t1d0 ONLINE 0 0 0

c7t2d0 REMOVED 0 0 0

c7t3d0 ONLINE 0 0 0

errors: No known data errors
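For monitoring purposes it can be handy to extract the pool state and the faulty devices from that output. The following Python sketch parses the text of zpool status; it is fed here with a copy of the output above, whereas in real life the text would come from running the command (for instance through `subprocess`). The parser is a best-effort illustration written for this article, not an official interface.

```python
def parse_zpool_status(text):
    """Return (pool_state, {device_name: device_state}) from `zpool status` text."""
    pool_state = None
    devices = {}
    in_config = False
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line.startswith("state:"):
            pool_state = line.split(":", 1)[1].strip()
        elif line.startswith("config:"):
            in_config = True
        elif line.startswith("errors:"):
            in_config = False
        elif in_config:
            fields = line.split()
            # Skip the column header; every other row is "<name> <state> ...".
            if fields[0] != "NAME" and len(fields) >= 2:
                devices[fields[0]] = fields[1]
    return pool_state, devices


SAMPLE = """\
  pool: san
 state: DEGRADED
status: One or more devices has been removed by the administrator.
        Sufficient replicas exist for the pool to continue functioning in a
        degraded state.
action: Online the device using 'zpool online' or replace the device with
        'zpool replace'.
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        san         DEGRADED     0     0     0
          raidz1-0  DEGRADED     0     0     0
            c7t0d0  ONLINE       0     0     0
            c7t1d0  ONLINE       0     0     0
            c7t2d0  REMOVED      0     0     0
            c7t3d0  ONLINE       0     0     0

errors: No known data errors
"""

pool_state, devices = parse_zpool_status(SAMPLE)
missing = [name for name, state in devices.items() if state == "REMOVED"]
print(pool_state, missing)
```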

Returning to normal operation

Now put the drive back in its bay and see what happens (wait a couple of seconds, the time for the drive to spin up):

# dmesg

(...)

Jun 19 12:23:12 xxxxxxx SATA device detected at port 2

Jun 19 12:23:12 xxxxxxx sata: [ID 663010 kern.info] /pci@0,0/pci1002,4393@11 :

Jun 19 12:23:12 xxxxxxx sata: [ID 761595 kern.info] SATA disk device at port 2

Jun 19 12:23:12 xxxxxxx sata: [ID 846691 kern.info] model WDC WD30EZRS-00J99B0

Jun 19 12:23:12 xxxxxxx sata: [ID 693010 kern.info] firmware 80.00A80

Jun 19 12:23:12 xxxxxxx sata: [ID 163988 kern.info] serial number WD-*************

Jun 19 12:23:12 xxxxxxx sata: [ID 594940 kern.info] supported features:

Jun 19 12:23:12 xxxxxxx sata: [ID 981177 kern.info] 48-bit LBA, DMA, Native Command Queueing, SMART, SMART self-test

Jun 19 12:23:12 xxxxxxx sata: [ID 643337 kern.info] SATA Gen2 signaling speed (3.0Gbps)

Jun 19 12:23:12 xxxxxxx sata: [ID 349649 kern.info] Supported queue depth 32

Jun 19 12:23:12 xxxxxxx sata: [ID 349649 kern.info] capacity = 5860533168 sectors

Solaris now sees the drive again! What about the storage pool?

# zpool status san

pool: san

state: DEGRADED

status: One or more devices has been removed by the administrator.

Sufficient replicas exist for the pool to continue functioning in a

degraded state.

action: Online the device using 'zpool online' or replace the device with

'zpool replace'.

scan: none requested

config:

NAME STATE READ WRITE CKSUM

san DEGRADED 0 0 0

raidz1-0 DEGRADED 0 0 0

c7t0d0 ONLINE 0 0 0

c7t1d0 ONLINE 0 0 0

c7t2d0 REMOVED 0 0 0

c7t3d0 ONLINE 0 0 0

errors: No known data errors

Still in degraded state, correct. ZFS does not take any initiative when a new drive is connected and it is up to you to handle the operations. Let's start by asking for the list of the SATA ports on the motherboard (we can safely ignore sata0/5 in our case: our motherboard chipset supports 6 SATA ports but only 5 of them have physical connectors on the motherboard PCB):

# cfgadm -a sata

Ap_Id Type Receptacle Occupant Condition

sata0/0::dsk/c7t0d0 disk connected configured ok

sata0/1::dsk/c7t1d0 disk connected configured ok

sata0/2 disk connected unconfigured unknown

sata0/3::dsk/c7t3d0 disk connected configured ok

sata0/4::dsk/c7t4d0 disk connected configured ok

sata0/5 sata-port empty unconfigured ok

To return to the normal state, just "configure" the drive. This is accomplished by:

# cfgadm -c configure sata0/2

# cfgadm -a sata

Ap_Id Type Receptacle Occupant Condition

sata0/0::dsk/c7t0d0 disk connected configured ok

sata0/1::dsk/c7t1d0 disk connected configured ok

sata0/2::dsk/c7t2d0 disk connected configured ok

sata0/3::dsk/c7t3d0 disk connected configured ok

sata0/4::dsk/c7t4d0 disk connected configured ok
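Spotting the port that needs to be configured can also be automated. This Python sketch scans the text printed by cfgadm -a sata and returns the attachment points where a disk is connected but still unconfigured; the sample is the listing taken before running cfgadm -c configure, and the function name is an invention for this example.

```python
def unconfigured_disks(cfgadm_output):
    """Return the Ap_Ids of ports whose disk is connected but not configured."""
    ports = []
    for line in cfgadm_output.splitlines():
        fields = line.split()
        # Expect exactly: Ap_Id, Type, Receptacle, Occupant, Condition
        if len(fields) != 5 or fields[0] == "Ap_Id":
            continue
        ap_id, _type, receptacle, occupant, _condition = fields
        if receptacle == "connected" and occupant == "unconfigured":
            ports.append(ap_id)
    return ports


SAMPLE = """\
Ap_Id                 Type       Receptacle  Occupant      Condition
sata0/0::dsk/c7t0d0   disk       connected   configured    ok
sata0/1::dsk/c7t1d0   disk       connected   configured    ok
sata0/2               disk       connected   unconfigured  unknown
sata0/3::dsk/c7t3d0   disk       connected   configured    ok
sata0/4::dsk/c7t4d0   disk       connected   configured    ok
sata0/5               sata-port  empty       unconfigured  ok
"""

print(unconfigured_disks(SAMPLE))
```

The empty sata0/5 port is skipped because nothing is connected to it; only sata0/2 is reported.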

What happens on the storage pool side?

# zpool status san

pool: san

state: ONLINE

scan: resilvered 24.6M in 0h0m with 0 errors on Sun Jun 19 12:45:30 2011

config:

NAME STATE READ WRITE CKSUM

san ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

c7t0d0 ONLINE 0 0 0

c7t1d0 ONLINE 0 0 0

c7t2d0 ONLINE 0 0 0

c7t3d0 ONLINE 0 0 0

errors: No known data errors

Fully operational again! Note that the system took the initiative of re-silvering the storage pool for you :-) This is very quick here because the pool is empty, but it can take several minutes or hours to complete. Here we just put the same drive back: ZFS is intelligent enough to scan what the drive contains and to know it has to attach the drive to the "san" storage pool. If we had replaced the drive with a brand new blank one, we would have had to explicitly tell ZFS to replace the drive (Solaris will resilver the array but you will still see it in a degraded state). Assuming the old and new devices are assigned to the same drive bay (here giving c7t2d0):

# zpool replace san c7t2d0 c7t2d0

Removal of two disks

What would happen if two disks die? Do not do this yourself, but for the sake of demonstration we have removed two disks from their bays. When the second drive is removed, the following appears on the console (also visible with dmesg):

SUNW-MSG-ID: ZFS-8000-HC, TYPE: Error, VER: 1, SEVERITY: Major

EVENT-TIME: Sun Jun 19 13:19:44 EDT 2011

PLATFORM: To-be-filled-by-O.E.M., CSN: To-be-filled-by-O.E.M., HOSTNAME: uranium

zfs-diagnosis, REV: 1.0

EVENT-ID: 139ee67e-e294-6709-9225-bff20ea7418e

DESC: The ZFS pool has experienced currently unrecoverable I/O

failures. Refer to http://sun.com/msg/ZFS-8000-HC for more information.

AUTO-RESPONSE: No automated response will be taken.

IMPACT: Read and write I/Os cannot be serviced.

REC-ACTION: Make sure the affected devices are connected, then run

'zpool clear'.

Any current I/O operation is suspended (and the processes waiting on them are put in uninterruptible sleep state)... Querying the pool status gives:

# zpool status san

pool: san

state: UNAVAIL

status: One or more devices are faulted in response to IO failures.

action: Make sure the affected devices are connected, then run 'zpool clear'.

see: http://www.sun.com/msg/ZFS-8000-HC

scan: resilvered 24.6M in 0h0m with 0 errors on Sun Jun 19 12:45:30 2011

config:

NAME STATE READ WRITE CKSUM

san UNAVAIL 0 0 0 insufficient replicas

raidz1-0 UNAVAIL 0 0 0 insufficient replicas

c7t0d0 ONLINE 0 0 0

c7t1d0 REMOVED 0 0 0

c7t2d0 REMOVED 0 0 0

c7t3d0 ONLINE 0 0 0

errors: 2 data errors, use '-v' for a list
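The two experiments above follow a simple rule: a RAID-Z vdev survives as many missing disks as it has parity devices. Here is a minimal sketch of that rule in Python; the function name raidz_state is hypothetical and the model deliberately ignores everything but a single RAID-Z vdev.

```python
def raidz_state(total_disks: int, parity: int, missing: int) -> str:
    """Return the expected state of a pool made of one RAID-Z vdev.

    parity is 1 for raidz1, 2 for raidz2, 3 for raidz3.
    """
    if not 0 <= missing <= total_disks:
        raise ValueError("missing must be between 0 and total_disks")
    if missing == 0:
        return "ONLINE"
    if missing <= parity:
        # Data can still be rebuilt from the remaining disks.
        return "DEGRADED"
    # More disks are gone than parity can compensate for.
    return "UNAVAIL"


# The "san" pool: 4 disks in a single raidz1 vdev (parity = 1).
for missing in range(3):
    print(missing, "disk(s) missing:", raidz_state(4, 1, missing))
```

With one disk pulled the pool is degraded but usable; with two it becomes unavailable, which matches the zpool status output shown above.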

Creating zvols

How will we use the storage pool? Well, this is not a critical question, although it remains important, because you can make a zvol grow on the fly (of course this only makes sense if the filesystem on it is also able to grow). For the demonstration we will use the following layout:

- san/os - 2.5 TB - Operating systems local mirror (several Linux, Solaris and *BSD)

- san/home-host1 - 500 GB - Home directories of the other Linux boxes

- san/test - 20 GB - Scrapable test data

To use the pool space in a clever manner, we will use a space allocation policy known as thin provisioning (also known as sparse volumes, notice the -s in the commands):

# zfs create -s -V 2.5t san/os

# zfs create -s -V 500g san/home-host1

# zfs create -s -V 20g san/test

# zfs list

(...)

san 3.10T 4.91T 44.9K /san

san/home-host1 516G 5.41T 80.8K -

san/os 2.58T 7.48T 23.9K -

san/test 20.6G 4.93T 23.9K -

Notice the last column in the last example: it shows no path (only a dash!) And doing ls -la in our SAN pool reports:

# ls -la /san

total 4

drwxr-xr-x 2 root root 2 2011-06-19 13:59 .

drwxr-xr-x 25 root root 27 2011-06-19 11:33 ..

This is absolutely correct: zvols are a bit different from standard datasets. If you peek at what lies under /dev you will notice a pseudo-directory named zvol. Let's see what it contains:

# ls -l /dev/zvol

total 0

drwxr-xr-x 3 root sys 0 2011-06-19 10:22 dsk

drwxr-xr-x 4 root sys 0 2011-06-19 10:34 rdsk

Ah! Interesting, what lies beneath? Let's take the red pill and dive one level deeper (we choose arbitrarily rdsk, dsk will show similar results):

# ls -l /dev/zvol/rdsk

total 0

drwxr-xr-x 6 root sys 0 2011-06-19 10:34 rpool1

drwxr-xr-x 2 root sys 0 2011-06-19 14:04 san

rpool1 corresponds to the system pool (your Solaris installation itself lives in a zpool, so you can do snapshots and rollbacks with it!) and san is our brand new storage pool. What is inside the san directory?

# ls -l /dev/zvol/rdsk/san

lrwxrwxrwx 1 root root 0 2011-06-19 14:11 home-host1 -> ../../../..//devices/pseudo/zfs@0:3,raw

lrwxrwxrwx 1 root root 0 2011-06-19 14:11 os -> ../../../..//devices/pseudo/zfs@0:4,raw

lrwxrwxrwx 1 root root 0 2011-06-19 14:11 test -> ../../../..//devices/pseudo/zfs@0:5,raw

Wow! The three zvols we have created are seen just as if they were three physical disks, although they actually live in a storage pool created as a RAID-Z1 array. "Just like" really means what it says, i.e. you can do everything with them just as you would with real disks (format won't see them, however, because it only scans /dev/rdsk and not /dev/zvol/rdsk).

iSCSI will be the cherry on the sundae!
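The sizes given to zfs create -V use binary multiples (500g means 500 x 2^30 bytes). A minimal sketch, assuming only the k/m/g/t suffixes are used, converts the three requested sizes to bytes and sums them to show how much space the sparse volumes promise in total. The helper name zfs_size_to_bytes is hypothetical, not a ZFS tool.

```python
from decimal import Decimal

# Binary multipliers for the size suffixes used in this article.
_UNITS = {"k": 2**10, "m": 2**20, "g": 2**30, "t": 2**40}


def zfs_size_to_bytes(size: str) -> int:
    """Convert a size such as '2.5t' or '500g' to a number of bytes."""
    size = size.strip().lower()
    if size and size[-1] in _UNITS:
        # Decimal avoids rounding surprises with fractional sizes.
        return int(Decimal(size[:-1]) * _UNITS[size[-1]])
    return int(size)


# The three sparse zvols created above.
requested = {"san/os": "2.5t", "san/home-host1": "500g", "san/test": "20g"}

for name, size in requested.items():
    print(f"{name:16s} {zfs_size_to_bytes(size):>15d} bytes")

total = sum(zfs_size_to_bytes(s) for s in requested.values())
print(f"{'total promised':16s} {total:>15d} bytes")
```

These byte counts are the ones sbdadm list-lu reports further down once the zvols are exported as logical units. With thin provisioning the space is only consumed as data is written, so keep an eye on zfs list as the volumes fill up.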

Phase 3: Bringing a bit of iSCSI magic

Now the real fun begins!

Solaris 11 uses a new COMSTAR implementation which differs from the one found in Solaris 10. This new version brings some slight changes, like no longer supporting the shareiscsi property on zvols.

iSCSI concepts overview

Before going further, you must know a bit about iSCSI and several fundamental concepts (it is not complicated, but you need to get a precise idea of it). First, iSCSI is exactly what its name suggests: a protocol to carry SCSI commands over a network. In the TCP/IP world, iSCSI relies on TCP (and not UDP), thus segmentation, retransmission and reordering of iSCSI packets are transparently handled by the TCP/IP stack. TCP being a stateful protocol, it will be aware of the situation should the network connection break. So no gambling on data integrity here: iSCSI disks are as reliable as if they were DAS (Directly Attached Storage) peripherals. Of course, using TCP does not by itself protect against packet tampering and man-in-the-middle attacks, just as with any other protocol relying on TCP.

Although iSCSI is a typical client-server architecture, the stakeholders are called nodes. A node can act as a server, as a client, or mix both roles (use remote iSCSI disks and provide its own iSCSI disks to other iSCSI nodes).

Because your virtual SCSI cable is nothing more than a network stream, you can do whatever you want with it, starting with applying QoS (Quality of Service) policies to it in your routers, encrypting it, or sniffing it if you are curious about the details. The drawback for the end-user of an iSCSI disk is the network speed: slow or overloaded networks will make I/O operations from/to iSCSI devices extremely irritating... This is not necessarily an issue for our SAN box used in a home gigabit LAN with a few machines, but it will become an issue if you intend to share your iSCSI disks over the Internet or use remote iSCSI disks over the Internet.

Initiator, target and LUN

It is important to figure out some concepts before going further (if you are used to SCSI, iSCSI will sound familiar). Here are some formal definitions coming from stmfadm(1M):

- Initiator: a device responsible for issuing SCSI I/O commands to a SCSI target and logical unit.

- Target: a device responsible for receiving SCSI I/O commands for a logical unit.

- Logical unit (LU): a device within a target responsible for executing SCSI I/O commands. It is addressed through its logical unit number (LUN).

The initiator and the target are respectively the client and the server side: an iSCSI initiator establishes a connection to an iSCSI target (located on a remote iSCSI node). A target can contain one or more logical units which execute the SCSI I/O commands.

Initiators and targets can:

- be either pure software solutions (just like here) or hardware solutions (iSCSI HBA, costs a couple of hundred dollars) with dedicated electronic boards to off-load the computer's CPUs. In the case of pure software initiators and targets, several open source/commercial solutions exist like Open iSCSI (initiator), Enterprise iSCSI Target (target), COMSTAR (target -- Solaris specific) and several others.

- use authentication mechanisms (CHAP). It is possible to do either one-way authentication (the target authenticates the initiator) or two-way authentication (the initiator and the target mutually authenticate themselves).
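To make the CHAP exchange less abstract, here is a minimal sketch of the computation defined by RFC 1994 with the MD5 algorithm: the authenticator sends an identifier and a random challenge, and the peer answers with MD5(identifier + secret + challenge). This only illustrates the hashing step, not the iSCSI login negotiation itself; the function names and the secret are hypothetical.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Compute the CHAP response: MD5 over identifier, secret and challenge."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def chap_verify(identifier: int, secret: bytes, challenge: bytes,
                response: bytes) -> bool:
    """Authenticator side: recompute the response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# Hypothetical values, for illustration only.
secret = b"example-secret-12"
identifier = 1
challenge = os.urandom(16)          # sent by the target to the initiator

response = chap_response(identifier, secret, challenge)   # initiator's answer
print("accepted:", chap_verify(identifier, secret, challenge, response))
```

In one-way authentication only the target runs the verification; in two-way (mutual) authentication the initiator also challenges the target with a second secret.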

To make a little analogy: imagine you are a king (iSCSI "client" node) who needs to establish a communication channel with a friendly warlord (LUN) in a remote castle (iSCSI "server" node):

- You send a messenger (initiator) who will travel through dangerous lands (network) for you

- Your messenger knocks at the door D1 (target) of the remote castle (server).

- The guard at the door tries to determine if your messenger has really been sent by you (CHAP authentication).

- Once authenticated, your messenger, who only knows his interlocutor by a nickname (LUN), says "I need to speak to the one called here the one-eyed". The guard knows the real name (physical device) of this famous one-eyed and can put your messenger in touch with him.

A bit schematic, but things work exactly that way. Oh, something the previous analogy does not illustrate: aside from emulating a remote SCSI disk, some iSCSI implementations have the capability to forward the command stream to a directly attached SCSI storage device in total transparency.

IQN vs EUI

Each initiator and target is given a unique identifier, just like a World Wide Name (WWN) in the Fibre Channel world. This identifier can adopt three different formats:

- iSCSI Qualified Name (IQN): described in RFC 3720, its structure is detailed just after this list.

- Extended-unique identifier (EUI): also described in RFC 3720, it is made of the literal eui. followed by an IEEE EUI-64 identifier written as 16 hexadecimal digits.

- T11 Network Address Authority (NAA): described in RFC 3980, NAA identifiers make iSCSI identifiers compatible with the naming conventions used in Fibre Channel (FC) and Serial Attached SCSI (SAS) storage technologies.

IQN probably remains the most commonly seen identifier format. An IQN identifier can be up to 223 ASCII characters long and is composed of the following four parts:

- the literal iqn

- the date (in yyyy-mm format) the naming authority took ownership of its internet domain name

- the internet domain (in reversed order) of the naming authority

- optional: a colon ":" prefixing an arbitrary storage designation

For example, all COMSTAR IQN identifiers start with iqn.1986-03.com.sun whereas Open iSCSI IQNs start with iqn.2005-03.org.open-iscsi. The following are all valid IQNs:

- iqn.2005-03.org.open-iscsi:d8d1606ef0cb

- iqn.2005-03.org.open-iscsi:storage-01

- iqn.1986-03.com.sun:01:0003ba2d0f67.46e47e5d

In the case of COMSTAR, the optional part of IQN can have two forms:

- :01:<MAC address>.<timestamp>[.<friendly name>] (used by iSCSI initiators)

- :02:<UUID>.<local target name> (used by iSCSI targets)

Where:

- <MAC address>: the 12 lowercase hexadecimal characters composing a 6-byte MAC address (MAC = EFGHIJ --> 45464748494a)

- <timestamp>: the lowercase hexadecimal representation of the number of seconds elapsed since January 1, 1970 at the time the name is created

- <friendly name>: an extension that provides a name that is meant to be meaningful to users.

- <UUID>: a unique identifier consisting of 36 characters (32 hexadecimal digits and 4 hyphens) in the format xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

- <local target name>: a name used by the target.
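The naming rules above can be checked mechanically. The following is a minimal sketch, not a complete RFC 3720 validator: it splits an IQN into its parts and, for the COMSTAR initiator form described above, decodes the MAC address and the creation timestamp. The names parse_iqn and decode_comstar_initiator are hypothetical.

```python
import re
from datetime import datetime, timezone

# iqn.<yyyy-mm>.<reversed domain>[:<storage designation>]
IQN_RE = re.compile(
    r"^iqn\.(?P<date>\d{4}-\d{2})\.(?P<authority>[a-z0-9.-]+)(?::(?P<suffix>.+))?$"
)
# COMSTAR initiator suffix: 01:<MAC address>.<timestamp>[.<friendly name>]
COMSTAR_INITIATOR_RE = re.compile(
    r"^01:(?P<mac>[0-9a-f]{12})\.(?P<ts>[0-9a-f]+)(?:\.(?P<name>.+))?$"
)


def parse_iqn(name: str) -> dict:
    """Split an IQN into date, naming authority and optional suffix."""
    if len(name) > 223:
        raise ValueError("an IQN cannot exceed 223 characters")
    match = IQN_RE.match(name)
    if match is None:
        raise ValueError(f"not a valid IQN: {name!r}")
    return match.groupdict()


def decode_comstar_initiator(suffix: str):
    """Return (MAC address, creation time in UTC) from a COMSTAR suffix."""
    match = COMSTAR_INITIATOR_RE.match(suffix)
    if match is None:
        raise ValueError(f"not a COMSTAR initiator suffix: {suffix!r}")
    mac = match["mac"]
    mac_text = ":".join(mac[i:i + 2] for i in range(0, 12, 2))
    created = datetime.fromtimestamp(int(match["ts"], 16), tz=timezone.utc)
    return mac_text, created


parts = parse_iqn("iqn.1986-03.com.sun:01:0003ba2d0f67.46e47e5d")
mac, created = decode_comstar_initiator(parts["suffix"])
print(parts["authority"], mac, created.isoformat())
```

Decoding the timestamp is a handy way to tell when an initiator name was generated, for instance after reinstalling a machine.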

Making the SAN box zvols accessible through iSCSI

The following has been tested under Solaris 11 Express; the various tutorials around give explanations for Solaris 10. Both versions have important differences (e.g. Solaris 11 no longer supports the shareiscsi property and uses a new COMSTAR implementation).

Installing pre-requisites

The first step is to install the iSCSI COMSTAR packages (they are not present in Solaris 11 Express, you must install them):

# pkg install iscsi/target

Packages to install: 1

Create boot environment: No

Services to restart: 1

DOWNLOAD PKGS FILES XFER (MB)

Completed 1/1 14/14 0.2/0.2

PHASE ACTIONS

Install Phase 48/48

PHASE ITEMS

Package State Update Phase 1/1

Image State Update Phase 2/2

Now enable the iSCSI Target service. If you have a look at its dependencies you will see it depends on the SCSI Target Mode Framework (STMF):

# svcs -l iscsi/target

fmri svc:/network/iscsi/target:default

name iscsi target

enabled false

state disabled

next_state none

state_time June 19, 2011 06:42:56 PM EDT

restarter svc:/system/svc/restarter:default

dependency require_any/error svc:/milestone/network (online)

dependency require_all/none svc:/system/stmf:default (disabled)

# svcadm enable -r iscsi/target

# svcs -l iscsi/target

fmri svc:/network/iscsi/target:default

name iscsi target

enabled true

state online

next_state none

state_time June 19, 2011 06:56:57 PM EDT

logfile /var/svc/log/network-iscsi-target:default.log

restarter svc:/system/svc/restarter:default

dependency require_any/error svc:/milestone/network (online)

dependency require_all/none svc:/system/stmf:default (online)

Are the services online? Good! Notice the -r: it simply asks svcadm to enable all of the dependencies as well.

Creating the Logical Units (LU)

You have two ways of creating LUs:

- stmfadm (used here, provides more control)

- sbdadm

The very first step of exporting our zvols is to create their corresponding LUs. This is accomplished by:

# stmfadm create-lu /dev/zvol/rdsk/san/os

Logical unit created: 600144F0200ACB0000004E0505940007

# stmfadm create-lu /dev/zvol/rdsk/san/home-host1

Logical unit created: 600144F0200ACB0000004E05059A0008

# stmfadm create-lu /dev/zvol/rdsk/san/test

Logical unit created: 600144F0200ACB0000004E0505A40009

Now, just for the sake of demonstration, we will check the result with two different commands:

# sbdadm list-lu

Found 3 LU(s)

GUID DATA SIZE SOURCE

-------------------------------- ------------------- ----------------

600144f0200acb0000004e0505940007 2748779069440 /dev/zvol/rdsk/san/os

600144f0200acb0000004e05059a0008 536870912000 /dev/zvol/rdsk/san/home-host1

600144f0200acb0000004e0505a40009 21474836480 /dev/zvol/rdsk/san/test

# stmfadm list-lu

LU Name: 600144F0200ACB0000004E0505940007

LU Name: 600144F0200ACB0000004E05059A0008

LU Name: 600144F0200ACB0000004E0505A40009

# stmfadm list-lu -v

LU Name: 600144F0200ACB0000004E0505940007

Operational Status: Online

Provider Name : sbd

Alias : /dev/zvol/rdsk/san/os

View Entry Count : 1

Data File : /dev/zvol/rdsk/san/os

Meta File : not set

Size : 2748779069440

Block Size : 512

Management URL : not set

Vendor ID : SUN

Product ID : COMSTAR

Serial Num : not set

Write Protect : Disabled

Writeback Cache : Enabled

Access State : Active

LU Name: 600144F0200ACB0000004E05059A0008

Operational Status: Online

Provider Name : sbd

Alias : /dev/zvol/rdsk/san/home-host1

View Entry Count : 1

Data File : /dev/zvol/rdsk/san/home-host1

Meta File : not set

Size : 536870912000

Block Size : 512

Management URL : not set

Vendor ID : SUN

Product ID : COMSTAR

Serial Num : not set

Write Protect : Disabled

Writeback Cache : Enabled

Access State : Active

LU Name: 600144F0200ACB0000004E0505A40009

Operational Status: Online

Provider Name : sbd

Alias : /dev/zvol/rdsk/san/test

View Entry Count : 1

Data File : /dev/zvol/rdsk/san/test

Meta File : not set

Size : 21474836480

Block Size : 512

Management URL : not set

Vendor ID : SUN

Product ID : COMSTAR

Serial Num : not set

Write Protect : Disabled

Writeback Cache : Enabled

Access State : Active

You can change some properties, like the alias name, if you wish:

# stmfadm modify-lu -p alias=operating-systems 600144F0200ACB0000004E0505940007

# stmfadm list-lu -v 600144F0200ACB0000004E0505940007

LU Name: 600144F0200ACB0000004E0505940007

Operational Status: Online

Provider Name : sbd

Alias : operating-systems

View Entry Count : 0

Data File : /dev/zvol/rdsk/san/os

Meta File : not set

Size : 2748779069440

Block Size : 512

Management URL : not set

Vendor ID : SUN

Product ID : COMSTAR

Serial Num : not set

Write Protect : Disabled

Writeback Cache : Enabled

Access State : Active

The GUID is the key you will use to manipulate the LUs (if you want to delete one, for example).

Mapping concepts

To be seen by iSCSI initiators, our LUs must go through an operation called "mapping". To map our brand new LUs we can adopt two strategies:

- Make a LU visible to ALL iSCSI initiators on EVERY port (simple to set up but poor in terms of security)

- Make a LU visible to certain iSCSI initiators via certain ports (selective mapping)

Under COMSTAR, a LU mapping is done via something called a view. A view is a table consisting of several view entries, each of which associates a unique {target group (tg), initiator group (ig), Logical Unit Number (LUN)} triplet with a Logical Unit (of course, a single LU can be used by several triplets). The Solaris SCSI target mode framework command line utilities are smart enough to detect and refuse duplicate {tg,ig,lun} entries, so you do not have to worry about them. The terminology used in the COMSTAR manual pages is a bit confusing because it uses "host group" instead of "initiator group", but both are the same thing if you read stmfadm(1M) carefully.

Is a target group a set of several targets, and a host (initiator) group a set of initiators? Yes, absolutely, with the following nuance: an initiator cannot be a member of two host groups. The LUN is just an arbitrary ordinal reference to a LU.

How will the view work? Let's say we have a view defined like this (this is not technically exact because we use targets and initiators rather than groups of them):

| Target | Initiator | LUN | GUID of Logical unit |

|---|---|---|---|

| iqn.2015-01.com.myiscsi.server01:storageC | iqn.2015-01.com.myorg.host04 | 13 | 600144F0200ACB0000004E05059A0008 |

| iqn.2015-01.com.myiscsi.server01:storageC | iqn.2015-01.com.myorg.host04 | 17 | 600144F0200ACB0000004E0505940007 |

| iqn.2015-01.com.myiscsi.server03:storageA | iqn.2015-01.com.myorg.host01 | 12 | 600144F0200ACB0000004E05059A0008 |

Internally, this iSCSI server knows which real devices (or zvols) are hidden behind 600144F0200ACB0000004E0505940007 and 600144F0200ACB0000004E05059A0008 (see the previous paragraph).

Suppose the initiator iqn.2015-01.com.myorg.host04 establishes a connection to the target iqn.2015-01.com.myiscsi.server01:storageC: it will be presented 2 LUNs (13 and 17). On the iSCSI client machine each one of those will appear as a distinct SCSI drive (Linux would show them as, for example, /dev/sdg and /dev/sdh whereas Solaris would present their logical paths as /dev/c4t1d0 and /dev/c4t2d0). However, the same initiator connecting to the target iqn.2015-01.com.myiscsi.server03:storageA will have no LUNs in its line of sight.
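The lookup described above can be modelled in a few lines. This is a minimal sketch of the idea only, using the same simplification as the table (single targets and initiators instead of groups); it is not how COMSTAR stores its views, and the names ViewEntry, visible_luns and add_entry are hypothetical.

```python
from typing import Dict, List, NamedTuple


class ViewEntry(NamedTuple):
    target: str
    initiator: str
    lun: int
    guid: str


# The three view entries of the table above.
VIEW: List[ViewEntry] = [
    ViewEntry("iqn.2015-01.com.myiscsi.server01:storageC",
              "iqn.2015-01.com.myorg.host04", 13,
              "600144F0200ACB0000004E05059A0008"),
    ViewEntry("iqn.2015-01.com.myiscsi.server01:storageC",
              "iqn.2015-01.com.myorg.host04", 17,
              "600144F0200ACB0000004E0505940007"),
    ViewEntry("iqn.2015-01.com.myiscsi.server03:storageA",
              "iqn.2015-01.com.myorg.host01", 12,
              "600144F0200ACB0000004E05059A0008"),
]


def visible_luns(view: List[ViewEntry], target: str,
                 initiator: str) -> Dict[int, str]:
    """Return {LUN: GUID} for what an initiator sees through a target."""
    return {e.lun: e.guid for e in view
            if e.target == target and e.initiator == initiator}


def add_entry(view: List[ViewEntry], entry: ViewEntry) -> None:
    """Add an entry, refusing a duplicate {target, initiator, LUN} triplet."""
    if entry.lun in visible_luns(view, entry.target, entry.initiator):
        raise ValueError("duplicate {target, initiator, LUN} triplet")
    view.append(entry)


host04 = "iqn.2015-01.com.myorg.host04"
print(visible_luns(VIEW, "iqn.2015-01.com.myiscsi.server01:storageC", host04))
print(visible_luns(VIEW, "iqn.2015-01.com.myiscsi.server03:storageA", host04))
```

The first call lists LUNs 13 and 17, the second returns nothing, which is the behaviour described for host04 above.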

Setting up the LU mapping

We start by creating a target, this is simply accomplished by:

# itadm create-target

Target iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603 successfully created

# stmfadm list-target -v

Target: iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603

Operational Status: Online

Provider Name : iscsit

Alias : -

Protocol : iSCSI

Sessions : 0

The trailing UUID will be different for you.

Second, we must create the target group (view entries are composed of target groups and initiator groups), arbitrarily named sbtg-01:

# stmfadm create-tg sbtg-01

Third, we add the target to the brand new target group:

# stmfadm add-tg-member -g sbtg-01 iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603

stmfadm: STMF target must be offline

Oops... To be added to a group, a target must be put offline first:

# stmfadm offline-target iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603

# stmfadm add-tg-member -g sbtg-01 iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603

# stmfadm online-target iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603

# stmfadm list-tg -v

Target Group: sbtg-01

Member: iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603

Now comes the turn of the initiator group (aka the host group, the terminology is a bit confusing). To make things simple we will use two initiators:

- One located on the SAN box itself

- One located on a remote Funtoo Linux box

The first initiator is already present on the SAN box because it has been automatically created at the iSCSI packages installation. To see it:

# iscsiadm list initiator-node

Initiator node name: iqn.1986-03.com.sun:01:809a71be02ff.4df93a1e

Initiator node alias: uranium

Login Parameters (Default/Configured):

Header Digest: NONE/-

Data Digest: NONE/-

Authentication Type: NONE

RADIUS Server: NONE

RADIUS Access: disabled

Tunable Parameters (Default/Configured):

Session Login Response Time: 60/-

Maximum Connection Retry Time: 180/-

Login Retry Time Interval: 60/-

Configured Sessions: 1

It is possible to create additional initiators on Solaris with itadm create-initiator

The second initiator (Open iSCSI is used, location can differ in your case if you use something else) IQN can be found on the Funtoo box in the file /etc/iscsi/initiatorname.iscsi:

# cat /etc/iscsi/initiatorname.iscsi | grep -e "^InitiatorName"

InitiatorName=iqn.2011-06.lan.mydomain.worsktation01:openiscsi-a902bcc1d45e4795580c06b1d66b2eaf

To create a group encompassing both initiators (again the name sbhg-01 is arbitrary):

# stmfadm create-hg sbhg-01

# stmfadm add-hg-member -g sbhg-01 iqn.1986-03.com.sun:01:809a71be02ff.4df93a1e iqn.2011-06.lan.mydomain.worsktation01:openiscsi-a902bcc1d45e4795580c06b1d66b2eaf

# stmfadm list-hg -v

Host Group: sbhg-01

Member: iqn.1986-03.com.sun:01:809a71be02ff.4df93a1e

Member: iqn.2011-06.lan.mydomain.worsktation01:openiscsi-a902bcc1d45e4795580c06b1d66b2eaf

In this simple case we have only one initiator group and one target group, so we will expose all of our LUs through them:

# stmfadm add-view -n 10 -t sbtg-01 -h sbhg-01 600144F0200ACB0000004E0505940007

# stmfadm add-view -n 11 -t sbtg-01 -h sbhg-01 600144F0200ACB0000004E05059A0008

# stmfadm add-view -n 12 -t sbtg-01 -h sbhg-01 600144F0200ACB0000004E0505A40009

- If -n is not specified, stmfadm will automatically assign a LU number (LUN).

- Here again, 10, 11 and 12 are arbitrary numbers (we did not start at 0 just for the sake of the demonstration)

Checking on one LU gives:

# stmfadm list-view -l 600144F0200ACB0000004E0505940007

View Entry: 0

Host group : sbhg-01

Target group : sbtg-01

LUN : 10

It is absolutely normal to get a single result here because a single entry concerning that LU has been created. If the LU were referenced in another {tg,ig,lun} triplet, you would see two entries.

The good news: everything is in order! The bad news: we have not finished (yet) :-)

Restricting the listening interface of a target (portals and portal groups)

As you may have noticed in the previous paragraph, once created, a target listens for incoming connections on ALL available interfaces, and this is not always suitable. Imagine you have a server with a 1 Gbit/s NIC and a 10 Gbit/s NIC: you may want to bind certain targets to your 1 Gbit/s NIC and the others to your 10 Gbit/s NIC. Is it possible to gain control over the NIC a target binds to? The answer is: YES!

This is accomplished via target portal groups. The mechanics are very similar to what was done before: we create a target portal group (named tpg-localhost here), then attach it to the target:

# itadm create-tpg tpg-localhost 127.0.0.1:3625

# itadm list-tpg -v

TARGET PORTAL GROUP PORTAL COUNT

tpg-localhost 1

portals: 127.0.0.1:3625

# itadm modify-target -t tpg-localhost iqn.1986-03.com.sun:02:a02da0f0-195f-c9ea-ce9a-82ec96fa36cb

# itadm list-target -v iqn.1986-03.com.sun:02:a02da0f0-195f-c9ea-ce9a-82ec96fa36cb

TARGET NAME STATE SESSIONS

iqn.1986-03.com.sun:02:a02da0f0-195f-c9ea-ce9a-82ec96fa36cb online 0

alias: -

auth: none (defaults)

targetchapuser: -

targetchapsecret: unset

tpg-tags: tpg-localhost = 2

Notice what lies in the field tpg-tags...

Testing from the SAN Box

Every bit is in place, now it is time to do some tests. Before an initiator can exchange I/O commands with a LUN located in a target, it must know what the given target contains. This process is known as discovery, and three mechanisms exist:

- Static discovery: this one is not really a discovery mechanism, it is just a manual binding of an iSCSI target to a particular NIC (IP address + TCP port)

- SendTargets (dynamic mechanism): SendTargets is a simple iSCSI discovery protocol integrated into the iSCSI specification (see appendix D of RFC 3720).

- Internet Storage Name Service - iSNS (dynamic mechanism): defined in RFC 4171, iSNS is a bit more sophisticated than SendTargets (the protocol can handle state changes of an iSCSI node, such as when a target goes offline). Quoting RFC 4171, "iSNS facilitates a seamless integration of IP and Fibre Channel networks due to its ability to emulate Fibre Channel fabric services and to manage both iSCSI and Fibre Channel devices."

In a first move, we will use the static discovery configuration to test the zvols accessibility through a loopback connection from the SAN box to itself. First we enable the static discovery:

# iscsiadm modify discovery --static enable

# iscsiadm list discovery

Discovery:

Static: enabled

Send Targets: disabled

iSNS: disabled

Second, we manually trace a path to the "remote" iSCSI target located on the SAN box itself:

# iscsiadm add static-config iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603,127.0.0.1

A bit silent at first glance, but let's be a bit curious and check what the kernel said at the exact moment the static discovery configuration was entered:

Jun 24 17:53:38 **** scsi: [ID 583861 kern.info] sd10 at scsi_vhci0: unit-address g600144f0200acb0000004e0505940007: f_tpgs

Jun 24 17:53:38 **** genunix: [ID 936769 kern.info] sd10 is /scsi_vhci/disk@g600144f0200acb0000004e0505940007

Jun 24 17:53:38 **** genunix: [ID 408114 kern.info] /scsi_vhci/disk@g600144f0200acb0000004e0505940007 (sd10) online

Jun 24 17:53:38 **** genunix: [ID 483743 kern.info] /scsi_vhci/disk@g600144f0200acb0000004e0505940007 (sd10) multipath status: degraded: path 1 iscsi0/disk@0000iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf8566030001,10 is online

Jun 24 17:53:38 **** scsi: [ID 583861 kern.info] sd11 at scsi_vhci0: unit-address g600144f0200acb0000004e05059a0008: f_tpgs

Jun 24 17:53:38 **** genunix: [ID 936769 kern.info] sd11 is /scsi_vhci/disk@g600144f0200acb0000004e05059a0008

Jun 24 17:53:38 **** genunix: [ID 408114 kern.info] /scsi_vhci/disk@g600144f0200acb0000004e05059a0008 (sd11) online

Jun 24 17:53:38 **** genunix: [ID 483743 kern.info] /scsi_vhci/disk@g600144f0200acb0000004e05059a0008 (sd11) multipath status: degraded: path 2 iscsi0/disk@0000iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf8566030001,11 is online

Jun 24 17:53:38 **** scsi: [ID 583861 kern.info] sd12 at scsi_vhci0: unit-address g600144f0200acb0000004e0505a40009: f_tpgs

Jun 24 17:53:38 **** genunix: [ID 936769 kern.info] sd12 is /scsi_vhci/disk@g600144f0200acb0000004e0505a40009

Jun 24 17:53:38 **** genunix: [ID 408114 kern.info] /scsi_vhci/disk@g600144f0200acb0000004e0505a40009 (sd12) online

Jun 24 17:53:38 **** genunix: [ID 483743 kern.info] /scsi_vhci/disk@g600144f0200acb0000004e0505a40009 (sd12) multipath status: degraded: path 3 iscsi0/disk@0000iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf8566030001,12 is online

Wow, a lot of useful information here:

- it says that the target iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603 is online

- it shows the 3 LUNs (disks) for that target (did you notice the numbers 10, 11 and 12? They are the same ones we used when defining the view)

- it says the "multipath" status is "degraded". At this point this is absolutely normal as we have only one path to the 3 "remote" disks.
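If you want to cross-check the LUN numbers against the view without reading the log by eye, the "multipath status" lines can be parsed. This is a minimal sketch that assumes the exact message layout shown above (it may differ on other Solaris releases); the function name luns_from_log is hypothetical.

```python
import re

# Matches the "multipath status" lines of the kernel log shown above.
PATH_RE = re.compile(
    r"/scsi_vhci/disk@g(?P<guid>[0-9a-f]{32}) \((?P<dev>sd\d+)\) "
    r"multipath status: (?P<status>\w+): "
    r"path \d+ iscsi0/disk@\S+,(?P<lun>\d+) is online"
)


def luns_from_log(text: str) -> dict:
    """Return {GUID: (device instance, LUN)} found in a kernel log excerpt."""
    return {m["guid"].upper(): (m["dev"], int(m["lun"]))
            for m in PATH_RE.finditer(text)}


sample = (
    "Jun 24 17:53:38 **** genunix: [ID 483743 kern.info] "
    "/scsi_vhci/disk@g600144f0200acb0000004e0505940007 (sd10) "
    "multipath status: degraded: path 1 "
    "iscsi0/disk@0000iqn.1986-03.com.sun:02:"
    "2e5faacb-4bdf-4f7f-e643-ebc8bf8566030001,10 is online"
)
print(luns_from_log(sample))
```

The GUIDs are upper-cased so they can be compared directly with what stmfadm list-lu prints.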

And the ultimate test: what would format say? Test it!

# format

Searching for disks...done

c0t600144F0200ACB0000004E0505940007d0: configured with capacity of 2560.00GB

AVAILABLE DISK SELECTIONS:

0. c0t600144F0200ACB0000004E0505A40009d0 <SUN -COMSTAR -1.0 cyl 2607 alt 2 hd 255 sec 63>

/scsi_vhci/disk@g600144f0200acb0000004e0505a40009

1. c0t600144F0200ACB0000004E05059A0008d0 <SUN -COMSTAR -1.0 cyl 65267 alt 2 hd 255 sec 63>

/scsi_vhci/disk@g600144f0200acb0000004e05059a0008

2. c0t600144F0200ACB0000004E0505940007d0 <SUN-COMSTAR-1.0-2.50TB>

/scsi_vhci/disk@g600144f0200acb0000004e0505940007

3. c7t0d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@0,0

4. c7t1d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@1,0

5. c7t2d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@2,0

6. c7t3d0 <ATA-WDC WD30EZRS-00J-0A80-2.73TB>

/pci@0,0/pci1002,4393@11/disk@3,0

7. c7t4d0 <ATA -OCZ-AGILITY2 -1.32 cyl 3914 alt 2 hd 255 sec 63>

/pci@0,0/pci1002,4393@11/disk@4,0

Specify disk (enter its number):

Perfect! You can try to select a disk then partition it if you wish.

When selecting an iSCSI disk you will have to use the fdisk option first if Solaris complains with: WARNING - This disk may be in use by an application that has modified the fdisk table. Ensure that this disk is not currently in use before proceeding to use fdisk. For some reason (not related to thin provisioning), some iSCSI drives appeared with a corrupted partition table.

Phase 4: Mounting a remote volume from a Funtoo Linux box

Now we have a functional Solaris box with several emulated volumes accessed just as if they were DAS (Direct Attached Storage) SCSI hard disks. Now the $100 question: "Once a filesystem has been created on the remote iSCSI volume, can I mount it from several hosts of my network?". The answer is: yes you can, but only one of them may have the volume mounted read-write if you intend to use a traditional (ZFS, UFS, EXT2/3/4, Reiser, BTRFS, JFS...) filesystem on it. Things are different if you intend to use the remote iSCSI disks for a cluster and use a filesystem like GFS/OCFS2 or Lustre on them. Cluster filesystems like GFS/OCFS2/Lustre are designed from the ground up to support concurrent read-write access (distributed locking) from several hosts and are smart enough to avoid data corruption.

Just to be sure you are aware:

Do not mount a remote iSCSI disk in read-write mode from several hosts unless you intend to use the disk with a cluster filesystem like GFS/OCFS2 or Lustre. Not respecting this principle will corrupt your data.

If you need to share the content of your newly created volume between several hosts of your network, the safest way is to connect to the iSCSI volume from one of your hosts, then configure an NFS share on this host. An alternative strategy is, on the SAN box, to set the sharenfs property (zfs set sharenfs=on /path/of/zvol) on the zvol and mount this NFS share from all of your client hosts. This technique supposes you have formatted the zvol with a filesystem readable by Solaris (UFS or ZFS). Remember: UFS is not endianness neutral. A UFS filesystem created on a SPARC machine won't be readable on an x86 machine and vice-versa. For us, NFS has limited interest in the present context :-)

Installing requirements

You have at least two choices for setting up an iSCSI initiator:

- sys-block/iscsi-initiator-core-tools

- sys-block/open-iscsi (use at least the version 2.0.872, it contains several bug fixes and includes changes required to be used with Linux 2.6.39 and Linux 3.0). We will use this alternative here.

Quoting Open iSCSI README, Open iSCSI is composed of two parts:

- some kernel modules (built-in in Linux Kernel sources)

- several userland bits:

- A management tool (iscsiadm) for the persistent Open iSCSI database

- A daemon (iscsid) that handles all of the initiator iSCSI magic in the background for you. Its role is to implement the control path of the iSCSI protocol, plus some management facilities such as automatically restarting discovery at startup, based on the contents of the persistent iSCSI database.

- An IQN identifier generator (iscsi-iname)

Linux kernel configuration

We suppose your Funtoo box is correctly set up and has operational networking. The very first thing to do is to reconfigure your Linux kernel to enable iSCSI:

- under SCSI low-level drivers, activate iSCSI Initiator over TCP/IP (CONFIG_ISCSI_TCP). This is the Open iSCSI kernel part

- under Cryptographic options, activate Cryptographic API and

- under Library routines, CRC32c (Castagnoli, et al) Cyclic Redundancy-Check should already have been enabled.

Open iSCSI does not use the term node as defined by the iSCSI RFC, where a node is a single iSCSI initiator or target. Open iSCSI uses the term node to refer to a portal on a target, so tools like iscsiadm require that --targetname and --portal argument be used when in node mode.

Configuring the Open iSCSI initiator

Parameters of the Open iSCSI initiator lie in the /etc/iscsi directory:

# ls -l /etc/iscsi

total 14

drwxr-xr-x 1 root root 26 Jun 24 22:09 ifaces

-rw-r--r-- 1 root root 1371 Jun 12 21:03 initiatorname.iscsi

-rw-r--r-- 1 root root 1282 Jun 24 22:08 initiatorname.iscsi.example

-rw------- 1 root root 11242 Jun 24 22:08 iscsid.conf

drw------- 1 root root 236 Jun 13 00:48 nodes

drw------- 1 root root 70 Jun 13 00:48 send_targets

- ifaces: used to bind the Open ISCSI initiator on a specific NIC (created for you by iscsid on the first execution)

- initiatorname.iscsi.example: stores a template to configure the name (IQN) of the iSCSI initiator (copy it as initiatorname.iscsi if that later does not exist)

- iscsid.conf: various configuration parameters (leave the default for now)

- Following directories stores what is called the persistent database in Open iSCSI terminology

- nodes (aka the nodes database): storage of node connection parameters per remote iSCSI target and portal (created for you, you can then change the values)

- send_targets (aka the discovery database): storage of what iscsi daemon discovers using a dicovery protocol per portal and remote iSCSI target. Most of the file there are symlinks to the files lying in the /etc/iscsi/nodes directory plus a file named st_config which stores various parameters.

Out-of-the-box, you don't have to do heavy tweaking. Just make sure that initiatorname.iscsi exists and contains the exact same initiator identifier you specified when defining the Logical Units (LUs) mapping.
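To compare the local initiator name with the one declared on the SAN box, the IQN can be read straight from the file. This is a small sketch assuming the usual InitiatorName=... line format of initiatorname.iscsi; the helper names and the IQN in the usage comment are hypothetical and only serve as an illustration.

```shell
#!/bin/sh
# Print the IQN stored in an initiatorname.iscsi-style file.
# Comment lines are ignored because only lines starting with
# "InitiatorName=" are printed; the first such line wins.
get_initiator_name() {
    sed -n 's/^InitiatorName=//p' "$1" | head -n 1
}

# Succeed only if the file holds exactly the expected IQN.
# Usage: same_initiator FILE EXPECTED_IQN
same_initiator() {
    [ "$(get_initiator_name "$1")" = "$2" ]
}

# Example call (the IQN below is a made-up placeholder):
# same_initiator /etc/iscsi/initiatorname.iscsi iqn.2011-06.example.lab:funtoo01 \
#     || echo "initiator name differs from the one mapped on the SAN box"
```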

Spawning iscsid

The next step consists of starting iscsid:

# /etc/init.d/iscsid start
iscsid | * Checking open-iSCSI configuration ...
iscsid | * Loading iSCSI modules ...
iscsid | * Loading libiscsi ... [ ok ]
iscsid | * Loading scsi_transport_iscsi ... [ ok ]
iscsid | * Loading iscsi_tcp ... [ ok ]
iscsid | * Starting iscsid ...
iscsid | * Setting up iSCSI targets ...
iscsid |iscsiadm: No records found! [ !! ]

At this point iscsid complains that its persistent database holds no records about iSCSI targets; this is absolutely normal.
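If you want to double-check that the three modules named in the startup messages are really loaded, the module list exposed by the kernel can be inspected. The sketch below assumes a /proc/modules-style file in which the module name is the first field of each line; the function name modules_loaded is ours. Drivers compiled into the kernel (=y) do not appear in that list, so a miss is not necessarily a problem.

```shell
#!/bin/sh
# Return success only if every named module appears in a
# /proc/modules-style file (module name = first field of a line).
# Usage: modules_loaded MODULES_FILE MODULE...
modules_loaded() {
    list=$1
    shift
    for mod in "$@"; do
        # Exact match on the first field, so "libiscsi" does not
        # accidentally match "libiscsi_tcp".
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$list" || {
            echo "not loaded: $mod" >&2
            return 1
        }
    done
}

# Example call on a live system:
# modules_loaded /proc/modules libiscsi scsi_transport_iscsi iscsi_tcp
```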

Querying the SAN box for available targets

What will the Funtoo box see when initiating a discovery session on the SAN box? Simple, just ask :-)

# iscsiadm -m discovery -t sendtargets -p 192.168.1.14
192.168.1.14:3260,1 iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603
192.168.1.13:3260,1 iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603
[2607:fa48:****:****:****:****:****:****]:3260,1 iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603

Here we get 3 results because the connection listener used by the remote target listens on the two NICs of the SAN box using its IPv4 stack and also on a public IPv6 address (numbers masked for privacy reasons). In that case querying one of the other addresses (192.168.1.13 or 2607:fa48:****:****:****:****:****:****) would give identical results. iscsiadm records the returned information in its persistent database. The ",1" in the above result is the target portal group tag; it is not related to the LUNs living inside the target.
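Each record returned by the discovery has the shape "portal,number target-name". If you need those fields in a script, they can be split with plain shell parameter expansion. This is a sketch only: the function name parse_discovery and the tab-separated output format are our own choices, and the sample records are the two IPv4 lines shown above.

```shell
#!/bin/sh
# Split discovery records read from stdin into
# "portal<TAB>number<TAB>target" lines.
parse_discovery() {
    while read -r addr target; do
        [ -n "$target" ] || continue   # skip blank or malformed lines
        portal=${addr%,*}              # everything before the last comma
        tag=${addr##*,}                # the number after the last comma
        printf '%s\t%s\t%s\n' "$portal" "$tag" "$target"
    done
}

# Demo with two of the records shown above.
parse_discovery <<'EOF'
192.168.1.14:3260,1 iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603
192.168.1.13:3260,1 iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603
EOF
```

Cutting at the last comma rather than at the first colon keeps bracketed IPv6 portals such as [address]:3260 intact.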

We have used the "send targets" discovery protocol. It is however possible to use iSNS or a static discovery (the latter is called a custom iSCSI portal in Open iSCSI terminology).

Connecting to the remote target

Now the most exciting part! We will initiate an iSCSI session on the remote target ("logging in" to the remote target). Since we didn't activate CHAP authentication on the remote target, the process is straightforward:

# iscsiadm -m node -T iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603 -p 192.168.1.13 --login
Logging in to [iface: default, target: iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603, portal: 192.168.1.13,3260]
Login to [iface: default, target: iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603, portal: 192.168.1.13,3260] successful.

You must use the portal reference exactly as shown by the discovery command above, because Open iSCSI recorded it and will look it up in its persistent database. Using a hostname where the discovery returned an IP address will make Open iSCSI complain ("iscsiadm: no records found!").
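Since node mode needs both a target name and a portal that match a stored record, one way to avoid typing mistakes is to derive the login commands from the discovery records themselves. The sketch below only prints the commands, it does not run them. The function name login_commands is ours, and we assume that iscsiadm accepts the portal in the ip:port form in which the discovery reports it (the login shown above omits the port).

```shell
#!/bin/sh
# Print (do not execute) one iscsiadm login command per discovery
# record read from stdin ("portal,number target-name" lines).
login_commands() {
    while read -r addr target; do
        [ -n "$target" ] || continue   # skip blank or malformed lines
        printf 'iscsiadm -m node -T %s -p %s --login\n' "$target" "${addr%,*}"
    done
}

# Example: review the generated commands before running any of them.
# iscsiadm -m discovery -t sendtargets -p 192.168.1.14 | login_commands
```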

The interesting part is in the system log:

# dmesg
...
[263902.476582] Loading iSCSI transport class v2.0-870.
[263902.486357] iscsi: registered transport (tcp)
[263939.655539] scsi7 : iSCSI Initiator over TCP/IP
[263940.163643] scsi 7:0:0:10: Direct-Access SUN COMSTAR 1.0 PQ: 0 ANSI: 5
[263940.163772] sd 7:0:0:10: Attached scsi generic sg4 type 0
[263940.165029] scsi 7:0:0:11: Direct-Access SUN COMSTAR 1.0 PQ: 0 ANSI: 5
[263940.165129] sd 7:0:0:11: Attached scsi generic sg5 type 0
[263940.165482] sd 7:0:0:10: [sdc] 5368709120 512-byte logical blocks: (2.74 TB/2.50 TiB)
[263940.166249] sd 7:0:0:11: [sdd] 1048576000 512-byte logical blocks: (536 GB/500 GiB)
[263940.166254] scsi 7:0:0:12: Direct-Access SUN COMSTAR 1.0 PQ: 0 ANSI: 5
[263940.166355] sd 7:0:0:12: Attached scsi generic sg6 type 0
[263940.167018] sd 7:0:0:10: [sdc] Write Protect is off
[263940.167021] sd 7:0:0:10: [sdc] Mode Sense: 53 00 00 00
[263940.167474] sd 7:0:0:11: [sdd] Write Protect is off
[263940.167476] sd 7:0:0:11: [sdd] Mode Sense: 53 00 00 00
[263940.167488] sd 7:0:0:12: [sde] 41943040 512-byte logical blocks: (21.4 GB/20.0 GiB)
[263940.167920] sd 7:0:0:10: [sdc] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
[263940.168453] sd 7:0:0:11: [sdd] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
[263940.169133] sd 7:0:0:12: [sde] Write Protect is off
[263940.169137] sd 7:0:0:12: [sde] Mode Sense: 53 00 00 00
[263940.170074] sd 7:0:0:12: [sde] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
[263940.171402] sdc: unknown partition table
[263940.172226] sdd: sdd1
[263940.174295] sde: sde1
[263940.175320] sd 7:0:0:10: [sdc] Attached SCSI disk
[263940.175991] sd 7:0:0:11: [sdd] Attached SCSI disk
[263940.177275] sd 7:0:0:12: [sde] Attached SCSI disk
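The capacities in the log can be cross-checked against the size of the volumes created on the SAN box. The kernel reports each logical unit as a count of 512-byte logical blocks, so a little shell arithmetic converts them to GiB. This is only a worked example; the function name blocks_to_gib is ours.

```shell
#!/bin/sh
# Convert a count of 512-byte logical blocks to whole GiB
# (integer division, exact for the three values used here).
blocks_to_gib() {
    echo $(( $1 * 512 / 1024 / 1024 / 1024 ))
}

# Block counts taken from the sdc, sdd and sde lines of the log.
for blocks in 5368709120 1048576000 41943040; do
    echo "$blocks blocks = $(blocks_to_gib "$blocks") GiB"
done
# prints:
# 5368709120 blocks = 2560 GiB
# 1048576000 blocks = 500 GiB
# 41943040 blocks = 20 GiB
```

2560 GiB is the 2.50 TiB reported for sdc, and the two other values match the 500 GiB and 20.0 GiB figures for sdd and sde.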

If you don't see the logical units appearing, check on the remote side that you have added the initiator to the host group when defining the view entries.

Something we have not looked into so far: if you explore the /dev directory of your Funtoo box you will notice a sub-directory named disk. The content of this directory is not maintained by Open iSCSI but by udev, and describes what the latter knows about all "SCSI" (iSCSI, SATA, SCSI...) disks:

# ls /dev/disk
total 0
drwxr-xr-x 2 root root 700 Jun 25 09:24 by-id
drwxr-xr-x 2 root root 60 Jun 22 04:04 by-label
drwxr-xr-x 2 root root 320 Jun 25 09:24 by-path
drwxr-xr-x 2 root root 140 Jun 22 04:04 by-uuid

The directory names are self-explanatory:

- by-id: stores the devices by their hardware identifier. You will notice the devices have been identified in several ways, notably as if they were Fibre Channel/SAS devices (presence of WWN lines). For local disks, the WWN is not random but corresponds to the WWN shown whenever you query the disk with hdparm -I (see the "Logical Unit WWN Device Identifier" section in the hdparm output). For iSCSI disks, the WWN corresponds to the GUID attributed by COMSTAR when the logical unit was created (see the Creating the Logical Units section above).

# ls -l /dev/disk/by-id
total 0
lrwxrwxrwx 1 root root 9 Jun 22 04:04 ata-HL-DT-ST_BD-RE_BH10LS30_K9OA4DH1606 -> ../../sr0
lrwxrwxrwx 1 root root 9 Jun 22 04:04 ata-LITE-ON_DVD_SHD-16S1S -> ../../sr1
...
lrwxrwxrwx 1 root root 9 Jun 25 09:24 scsi-3600144f0200acb0000004e0505940007 -> ../../sdc
lrwxrwxrwx 1 root root 9 Jun 25 09:24 scsi-3600144f0200acb0000004e05059a0008 -> ../../sdd
lrwxrwxrwx 1 root root 9 Jun 25 09:24 scsi-3600144f0200acb0000004e0505a40009 -> ../../sde
....
lrwxrwxrwx 1 root root 9 Jun 22 04:04 wwn-0x**************** -> ../../sda
lrwxrwxrwx 1 root root 10 Jun 22 08:04 wwn-0x****************-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Jun 22 04:04 wwn-0x****************-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Jun 22 04:04 wwn-0x****************-part4 -> ../../sda4
lrwxrwxrwx 1 root root 9 Jun 25 09:24 wwn-0x600144f0200acb0000004e0505940007 -> ../../sdc
lrwxrwxrwx 1 root root 9 Jun 25 09:24 wwn-0x600144f0200acb0000004e05059a0008 -> ../../sdd
lrwxrwxrwx 1 root root 9 Jun 25 09:24 wwn-0x600144f0200acb0000004e0505a40009 -> ../../sde

- by-label: stores the storage devices by their label (volume name). Optical media and ext2/3/4 partitions (tune2fs -L ...) are typically labelled. If a device has not been labelled with a name it won't show up there:

# ls -l /dev/disk/by-label
total 0
lrwxrwxrwx 1 root root 9 Jun 22 04:04 DATA_20110621 -> ../../sr1
lrwxrwxrwx 1 root root 10 Jun 25 10:41 boot-partition -> ../../sda1

- by-path: stores the storage devices by their physical path. For DAS (Direct Attached Storage) devices, paths refer to something under /sys. iSCSI devices are referred to by the name of the target where the logical unit resides, followed by the LUN of the latter (notice the LUN number: it is the same one you attributed when creating the view on the remote iSCSI node):

# ls -l /dev/disk/by-path
total 0
lrwxrwxrwx 1 root root 9 Jun 25 09:24 ip-192.168.1.13:3260-iscsi-iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603-lun-10 -> ../../sdc
lrwxrwxrwx 1 root root 9 Jun 25 09:24 ip-192.168.1.13:3260-iscsi-iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603-lun-11 -> ../../sdd
lrwxrwxrwx 1 root root 9 Jun 25 09:24 ip-192.168.1.13:3260-iscsi-iqn.1986-03.com.sun:02:2e5faacb-4bdf-4f7f-e643-ebc8bf856603-lun-12 -> ../../sde
lrwxrwxrwx 1 root root 9 Jun 22 04:04 pci-0000:00:1f.2-scsi-0:0:0:0 -> ../../sda
lrwxrwxrwx 1 root root 10 Jun 25 10:41 pci-0000:00:1f.2-scsi-0:0:0:0-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Jun 22 04:04 pci-0000:00:1f.2-scsi-0:0:0:0-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Jun 22 04:04 pci-0000:00:1f.2-scsi-0:0:0:0-part4 -> ../../sda4
lrwxrwxrwx 1 root root 9 Jun 22 04:04 pci-0000:00:1f.2-scsi-0:0:1:0 -> ../../sr0
lrwxrwxrwx 1 root root 9 Jun 22 04:04 pci-0000:00:1f.2-scsi-1:0:0:0 -> ../../sdb
...

- by-uuid: a bit similar to /dev/disk/by-label, but disks are reported by their UUID (if defined). In the following example only /dev/sda has some UUIDs defined (this disk holds several BTRFS partitions which were automatically given a UUID at creation time):

# ls -l /dev/disk/by-uuid
total 0
lrwxrwxrwx 1 root root 10 Jun 22 04:04 01178c43-7392-425e-8acf-3ed16ab48813 -> ../../sda4
lrwxrwxrwx 1 root root 10 Jun 22 04:04 1701af39-8ea3-4463-8a77-ec75c59e716a -> ../../sda2
lrwxrwxrwx 1 root root 10 Jun 25 10:41 5432f044-faa2-48d3-901d-249275fa2976 -> ../../sda1
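The by-path names of iSCSI disks follow a regular pattern (ip-PORTAL-iscsi-TARGET-lun-N), so the portal, target name and LUN can be recovered from the link name alone. The sketch below relies only on the pattern visible in the listing above; the function name parse_by_path is ours, and the handling of a trailing -partN suffix is an assumption based on how partitions are named in the other directories.

```shell
#!/bin/sh
# Print "portal target lun" for an iSCSI by-path link name.
# Returns non-zero for names that do not look like iSCSI entries.
parse_by_path() {
    case $1 in
        ip-*-iscsi-*-lun-*) ;;
        *) return 1 ;;
    esac
    name=${1#ip-}                # drop the leading "ip-"
    name=${name%-part[0-9]*}     # drop an optional partition suffix
    portal=${name%%-iscsi-*}     # text before "-iscsi-"
    rest=${name#*-iscsi-}        # text after "-iscsi-"
    target=${rest%-lun-*}        # text before the last "-lun-"
    lun=${rest##*-lun-}          # number after the last "-lun-"
    printf '%s %s %s\n' "$portal" "$target" "$lun"
}

# Example: list the LUN behind every iSCSI disk.
# for link in /dev/disk/by-path/ip-*; do
#     parse_by_path "$(basename "$link")"
# done
```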

Using the remote LU

For storage devices larger than 2.19 TB, do not use traditional partitioning tools like fdisk/cfdisk; use a GPT partitioning tool like gptfdisk (sys-apps/gptfdisk).

At this point the most difficult part is done: you can now use the remote iSCSI logical units just as if they were DAS devices. Let's demonstrate with one:
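The 2.19 TB figure comes from the MBR partition table format, which stores sector addresses in 32 bits: with 512-byte sectors it cannot describe more than 2^32 sectors. The sketch below simply compares a sector count with that limit; the names MBR_MAX_SECTORS and needs_gpt are ours, and 512-byte sectors are assumed.

```shell
#!/bin/sh
# Largest sector count a 32-bit MBR address can cover.
MBR_MAX_SECTORS=4294967296    # 2^32

# Succeed if a disk with the given number of 512-byte sectors
# is too large for an MBR partition table.
needs_gpt() {
    [ "$1" -gt "$MBR_MAX_SECTORS" ]
}

echo "MBR limit with 512-byte sectors: $(( MBR_MAX_SECTORS * 512 )) bytes"
# prints: MBR limit with 512-byte sectors: 2199023255552 bytes

# Sector counts of the 2.5 TiB and 500 GiB logical units of this lab.
for sectors in 5368709120 1048576000; do
    if needs_gpt "$sectors"; then
        echo "$sectors sectors: GPT required"
    else
        echo "$sectors sectors: MBR would still fit"
    fi
done
```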

# gdisk /dev/sdc
GPT fdisk (gdisk) version 0.7.1
Partition table scan:
MBR: not present
BSD: not present
APM: not present
GPT: not present
Creating new GPT entries.

Command (? for help): n
Partition number (1-128, default 1):
First sector (34-5368709086, default = 34) or {+-}size{KMGTP}:
Information: Moved requested sector from 34 to 2048 in
order to align on 2048-sector boundaries.
Use 'l' on the experts' menu to adjust alignment
Last sector (2048-5368709086, default = 5368709086) or {+-}size{KMGTP}:
Current type is 'Linux/Windows data'
Hex code or GUID (L to show codes, Enter = 0700):
Changed type of partition to 'Linux/Windows data'

Command (? for help): p
Disk /dev/sdc: 5368709120 sectors, 2.5 TiB
Logical sector size: 512 bytes
Disk identifier (GUID): 6AEC03B6-0047-44E1-B6C7-1C0CBC7C4CE6
Partition table holds up to 128 entries
First usable sector is 34, last usable sector is 5368709086
Partitions will be aligned on 2048-sector boundaries
Total free space is 2014 sectors (1007.0 KiB)
Number  Start (sector)  End (sector)  Size     Code  Name
1       2048            5368709086    2.5 TiB  0700  Linux/Windows data

Command (? for help): w
Final checks complete. About to write GPT data. THIS WILL OVERWRITE EXISTING
PARTITIONS!!
Do you want to proceed? (Y/N): yes
OK; writing new GUID partition table (GPT).
The operation has completed successfully.

# gdisk -l /dev/sdc
GPT fdisk (gdisk) version 0.7.1
Partition table scan:
MBR: protective
BSD: not present
APM: not present
GPT: present
Found valid GPT with protective MBR; using GPT.
Disk /dev/sdc: 5368709120 sectors, 2.5 TiB
Logical sector size: 512 bytes
Disk identifier (GUID): 6AEC03B6-0047-44E1-B6C7-1C0CBC7C4CE6
Partition table holds up to 128 entries
First usable sector is 34, last usable sector is 5368709086
Partitions will be aligned on 2048-sector boundaries
Total free space is 2014 sectors (1007.0 KiB)
Number  Start (sector)  End (sector)  Size     Code  Name
1       2048            5368709086    2.5 TiB  0700  Linux/Windows data

Notice that COMSTAR emulates a disk with traditional 512-byte sectors.
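The sector numbers printed by gdisk can be reproduced by hand. With 512-byte sectors and a 128-entry table, GPT keeps the first 34 sectors (protective MBR, header, 32 sectors of partition entries) and the last 33 sectors (backup entries and backup header) for itself. The following worked example assumes exactly that layout; the function name gpt_usable_range is ours.

```shell
#!/bin/sh
# Print "first last" usable sectors of a GPT disk, assuming
# 512-byte sectors and the default 128 entries of 128 bytes each:
#   sector 0      protective MBR
#   sector 1      primary GPT header
#   sectors 2-33  partition entries (128 * 128 bytes = 32 sectors)
#   last 33       backup partition entries + backup header
gpt_usable_range() {
    total=$1
    first=34
    last=$(( total - 1 - 33 ))   # highest sector number minus backup area
    echo "$first $last"
}

gpt_usable_range 5368709120
# prints: 34 5368709086

# Free space left before the partition that was aligned on sector 2048:
echo "$(( 2048 - 34 )) sectors, $(( (2048 - 34) * 512 / 1024 )) KiB"
# prints: 2014 sectors, 1007 KiB
```

Both results match the "last usable sector" and "Total free space" lines of the gdisk output above.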

Now have a look again in /dev/disk/by-id:

# ls -l /dev/disk/by-id
...
lrwxrwxrwx 1 root root 9 Jun 25 13:23 wwn-0x600144f0200acb0000004e0505940007 -> ../../sdc
lrwxrwxrwx 1 root root 10 Jun 25 13:33 wwn-0x600144f0200acb0000004e0505940007-part1 -> ../../sdc1
...
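Kernel names such as sdc are handed out in detection order, so in scripts it is usually safer to start from the by-id link and resolve it when needed. Here is a minimal sketch, assuming readlink is available (it is on any usual Linux system); the function name resolve_link is ours.

```shell
#!/bin/sh
# Print the kernel device name (e.g. sdc1) that a
# /dev/disk/by-id style symlink points to.
# Returns non-zero if the argument is not a symlink.
resolve_link() {
    target=$(readlink "$1") || return 1
    basename "$target"
}

# Example with the partition created above:
# resolve_link /dev/disk/by-id/wwn-0x600144f0200acb0000004e0505940007-part1
```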